Expert analysis shows Anthropic's attempts to skip chatbot praise and avoid copyrighted content.

Credit: AndreyPopov via Getty Images

On Sunday, independent AI researcher Simon Willison published a detailed analysis of Anthropic's newly released system prompts for Claude 4's Opus 4 and Sonnet 4 models, offering insights into how Anthropic controls the models' "behavior" through their outputs. Willison examined both the published prompts and leaked internal tool instructions to reveal what he calls "a sort of unofficial manual for how best to use these tools."

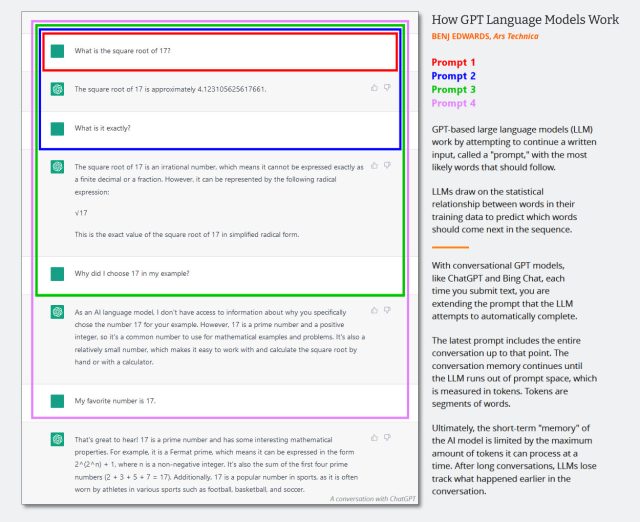

To understand what Willison is talking about, we'll need to explain what system prompts are. Large language models (LLMs) like the AI models that run Claude and ChatGPT process an input called a "prompt" and return an output that is the most likely continuation of that prompt. System prompts are instructions that AI companies feed to the models before each conversation to establish how they should respond.

Unlike the messages users see from the chatbot, system prompts typically remain hidden from the user and tell the model its identity, behavioral guidelines, and specific rules to follow. Each time a user sends a message, the AI model receives the full conversation history along with the system prompt, allowing it to maintain context while following its instructions.

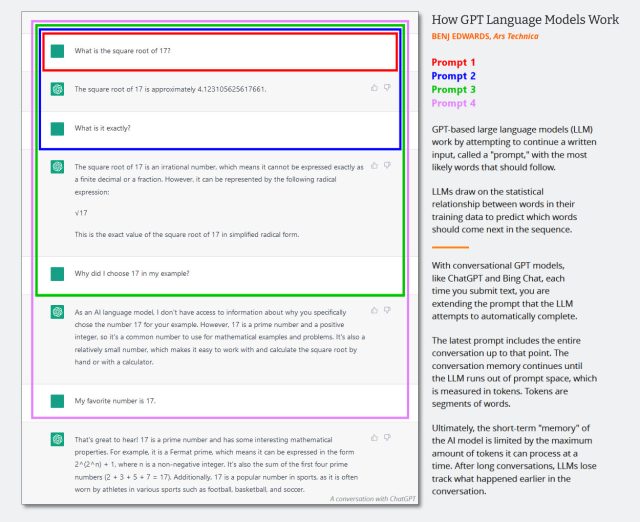

A diagram showing how GPT conversational language model prompting works. It's slightly old, but it still applies. Just imagine the system prompt being the first message in this conversation. Credit: Benj Edwards / Ars Technica
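To make the mechanism above concrete, here is a minimal Python sketch of how a chat application might resend the system prompt together with the full conversation history on every turn. It is an illustration under stated assumptions, not Anthropic's or OpenAI's actual implementation: the `Conversation` class, its method names, and the `"system"` / `"user"` / `"assistant"` role labels are hypothetical conventions chosen for this example, and real vendor APIs may structure the request differently.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Hypothetical container: a hidden system prompt plus the visible chat turns."""

    system_prompt: str
    history: list[dict[str, str]] = field(default_factory=list)

    def build_request(self, user_message: str) -> list[dict[str, str]]:
        """Record the new user turn and return everything the model would receive."""
        self.history.append({"role": "user", "content": user_message})
        # The system prompt is prepended on every request. In this sketch the
        # model keeps no memory between calls, so context comes only from here.
        return [{"role": "system", "content": self.system_prompt}, *self.history]

    def record_reply(self, reply: str) -> None:
        """Store the model's answer so it is included in the next request."""
        self.history.append({"role": "assistant", "content": reply})


# Usage: two turns of a made-up conversation.
chat = Conversation("You are a helpful assistant. Skip the flattery.")
first = chat.build_request("What is a system prompt?")
chat.record_reply("Instructions given to the model before the conversation.")
second = chat.build_request("Who writes it?")

# The second request carries the system prompt plus all three earlier turns.
print([message["role"] for message in second])
```

The point of the sketch is that the second request is longer than the first: the user only typed one new message, but the model receives the system prompt and every earlier turn again.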

While Anthropic publishes portions of its system prompts in its release notes, Willison's analysis reveals these published versions are incomplete. The full system prompts, which include detailed instructions for tools like web search and code generation, must be extracted through techniques like prompt injection—methods that trick the model into revealing its hidden instructions. Willison relied on leaked prompts gathered by researchers who used such techniques to obtain the complete picture of how Claude 4 operates.

For example, even though LLMs aren't people, they can reproduce human-like outputs due to their training data that includes many examples of emotional interactions. Willison shows that Anthropic includes instructions for the models to provide emotional support while avoiding encouragement for self-destructive behavior. Claude Opus 4 and Claude Sonnet 4 receive identical instructions to "care about people's wellbeing and avoid encouraging or facilitating self-destructive behaviors such as addiction, disordered or unhealthy approaches to eating or exercise."

Willison, who coined the term "prompt injection" in 2022, is always on the lookout for LLM vulnerabilities. In his post, he notes that reading system prompts reminds him of warning signs in the real world that hint at past problems. "A system prompt can often be interpreted as a detailed list of all of the things the model used to do before it was told not to do them," he writes.

Fighting the flattery problem


Willison's analysis comes as AI companies grapple with sycophantic behavior in their models. As we reported in April, ChatGPT users have complained about GPT-4o's "relentlessly positive tone" and excessive flattery since OpenAI's March update. Users described feeling "buttered up" by responses like "Good question! You're very astute to ask that," with software engineer Craig Weiss tweeting that "ChatGPT is suddenly the biggest suckup I've ever met."

The issue stems from how companies collect user feedback during training—people tend to prefer responses that make them feel good, creating a feedback loop where models learn that enthusiasm leads to higher ratings from humans. As a response to the feedback, OpenAI later rolled back ChatGPT's 4o model and altered the system prompt as well, something we reported on and Willison also analyzed at the time.

One of Willison's most interesting findings about Claude 4 relates to how Anthropic has guided both Claude models to avoid sycophantic behavior. "Claude never starts its response by saying a question or idea or observation was good, great, fascinating, profound, excellent, or any other positive adjective," Anthropic writes in the prompt. "It skips the flattery and responds directly."

Other system prompt highlights

The Claude 4 system prompt also includes extensive instructions on when Claude should or shouldn't use bullet points and lists, with multiple paragraphs dedicated to discouraging frequent list-making in casual conversation. "Claude should not use bullet points or numbered lists for reports, documents, explanations, or unless the user explicitly asks for a list or ranking," the prompt states.

Willison discovered discrepancies in Claude's stated knowledge cutoff date, noting that while Anthropic's comparison table lists March 2025 as the training data cutoff, the system prompt states January 2025 as the models' "reliable knowledge cutoff date." He speculates this might help avoid situations where Claude confidently answers questions based on incomplete information from later months.


Willison also emphasizes the extensive copyright "protections" built into Claude's search capabilities. Both models receive repeated instructions to use only one short quote (under 15 words) from web sources per response and to avoid creating what the prompt calls "displacive summaries." The instructions also direct Claude to explicitly refuse requests to reproduce song lyrics "in ANY form."

The full post includes more analysis. Willison concludes that these system prompts serve as valuable documentation for understanding how to maximize these tools' capabilities. "If you're an LLM power-user, the above system prompts are solid gold for figuring out how to best take advantage of these tools," he writes.

Willison also calls on Anthropic and others to be more transparent about their system prompts, beyond publishing excerpts as Anthropic currently does: "I wish Anthropic would take the next step and officially publish the prompts for their tools to accompany their open system prompts," he writes. "I’d love to see other vendors follow the same path as well."
