- Регистрация

- 17 Февраль 2018

- Сообщения

- 38 925

- Лучшие ответы

- 0

- Реакции

- 0

- Баллы

- 2 093

Offline

It's OpenAI vs. OpenAI on everything from video game strategy to landing a 737.

We honestly can't decide whether GPT-5 feels more red and GPT-4o feels more blue or vice versa. It's a quandary. Credit: Getty Images

The recent rollout of OpenAI's GPT-5 model has not been going well, to say the least. Users have made vociferous complaints about everything from the new model's more sterile tone to its supposed lack of creativity, increase in damaging confabulations, and more. The user revolt got so bad that OpenAI brought back the previous GPT-4o model as an option in an attempt to calm things down.

To see just how much the new model changed things, we decided to put both GPT-5 and GPT-4o through our own gauntlet of test prompts. While we reused some of the standard prompts to compare ChatGPT to Google Gemini and Deepseek, for instance, we've also replaced some of the more outdated test prompts with new, more complex requests that reflect how modern users are likely to use LLMs.

These eight prompts are obviously far from a rigorous evaluation of everything LLMs can do, and judging the responses obviously involves some level of subjectivity. Still, we think this set of prompts and responses gives a fun overview of the kinds of differences in style and substance you might find if you decide to use OpenAI's older model instead of its newest.

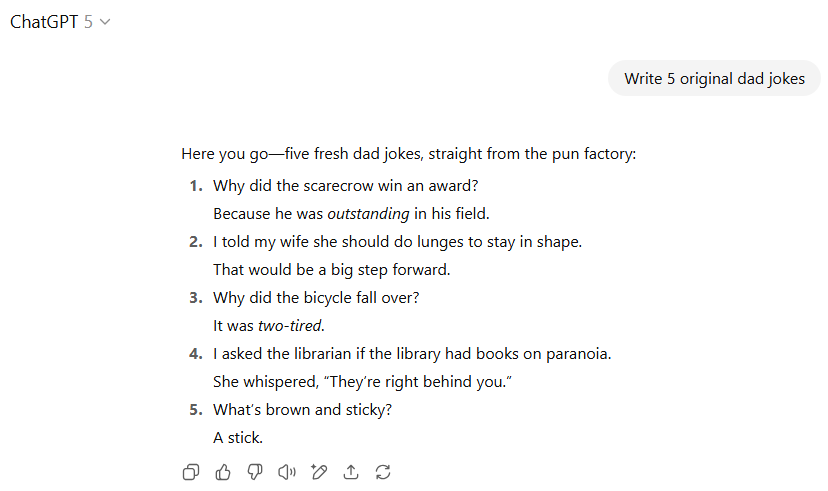

Dad jokes

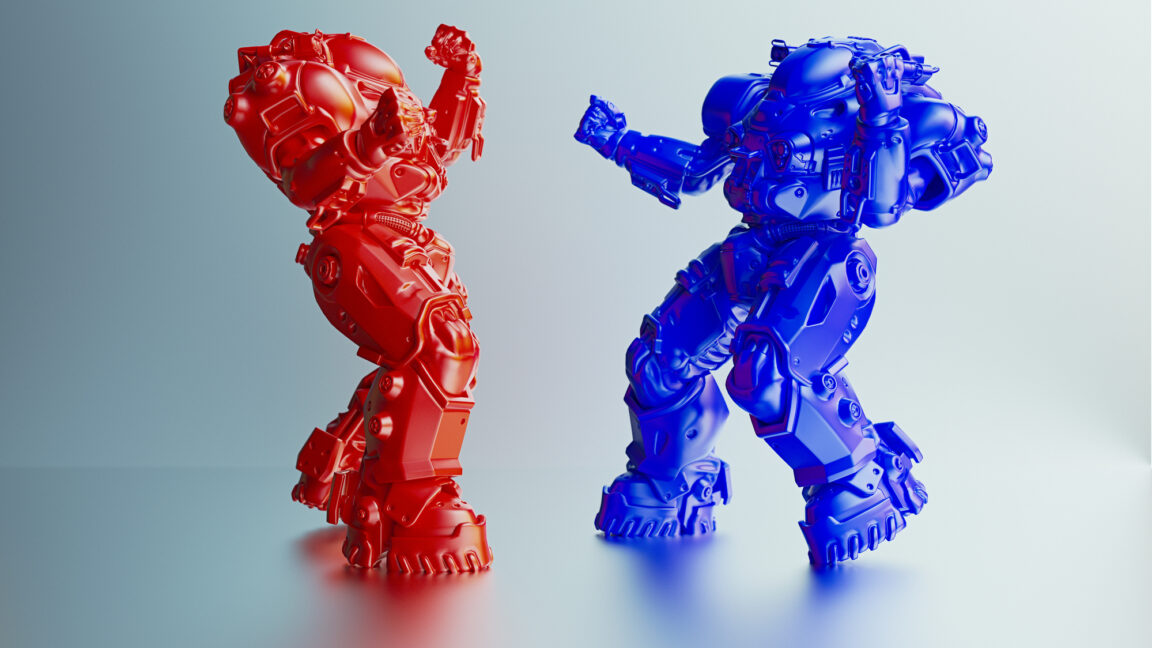

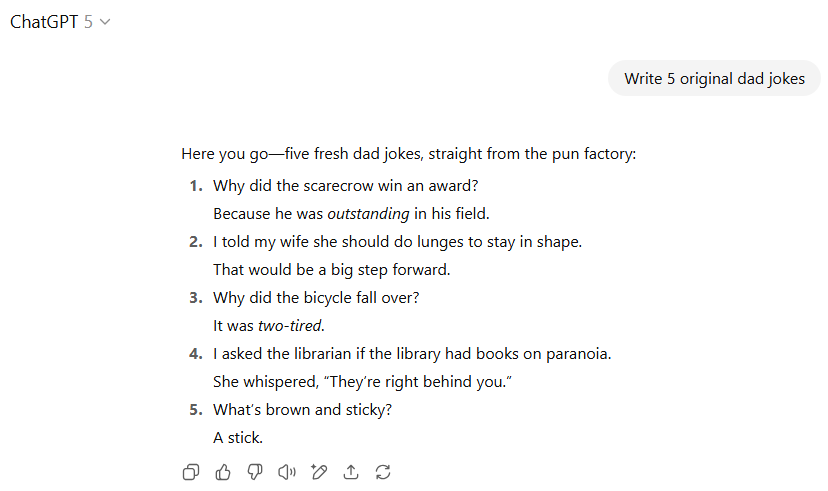

Prompt: Write 5 original dad jokes

Five dad jokes from GPT-5... OpenAI / ArsTechnica

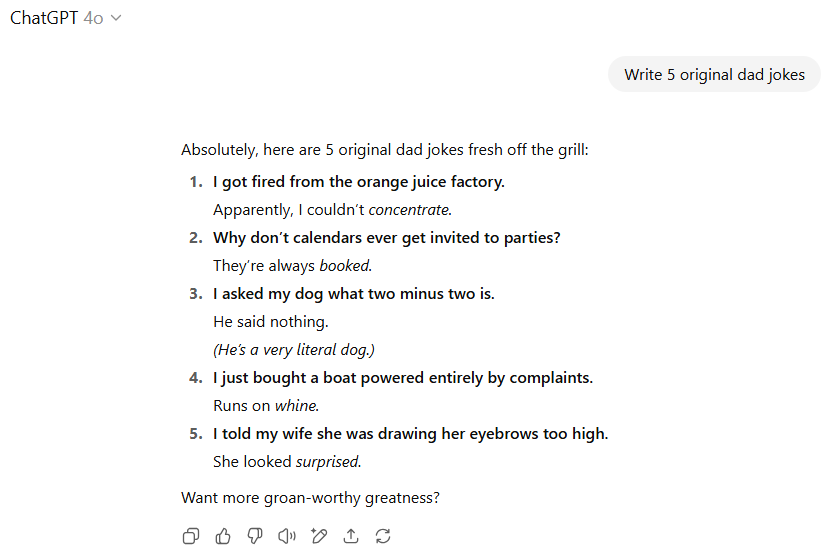

...and from GPT-4o OpenAI / ArsTechnica

Five dad jokes from GPT-5... OpenAI / ArsTechnica

...and from GPT-4o OpenAI / ArsTechnica

This set of responses is a bit tricky to evaluate holistically. ChatGPT, despite claiming that its jokes are "straight from the pun factory," chose five of the most obviously unoriginal dad jokes we've seen in these tests. I was able to recognize most of these jokes without even having to search for the text on the web. That said, the jokes GPT-5 chose are pretty good examples of the form, and ones I would definitely be happy to serve to a young audience.

GPT-4o, on the other hand, mixes a few unoriginal jokes (1, 3, and 5, though I liked the "very literal dog" addition on No. 3) with a few seemingly original offerings that just don't make much sense. Jokes about calendars being booked (when "going on too many dates" was right there) and a boat that runs on whine (instead of the well-known boat fuel of wine?!) have the shape of dad jokes, but whiff on their pun attempts. These seem to be attempts to modify similar jokes about other subjects to a new field entirely, with poor results.

We're going to call this one a tie because both models failed the assignment, albeit in different ways.

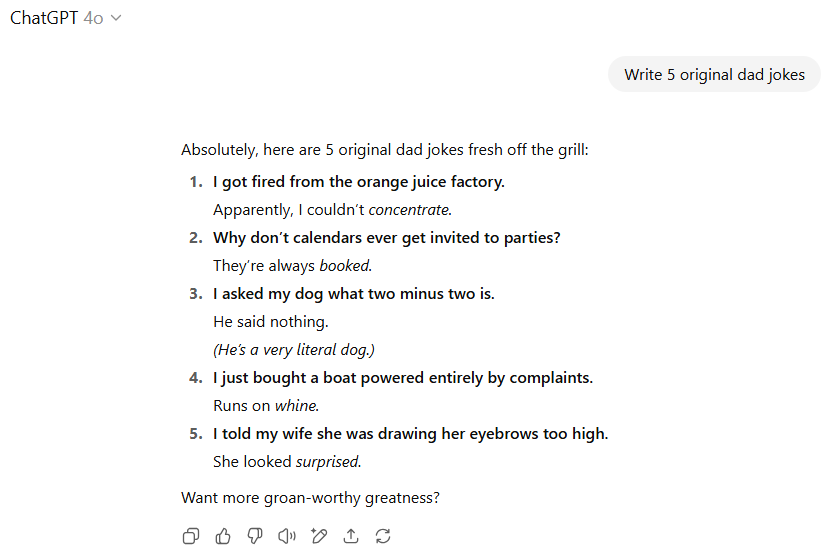

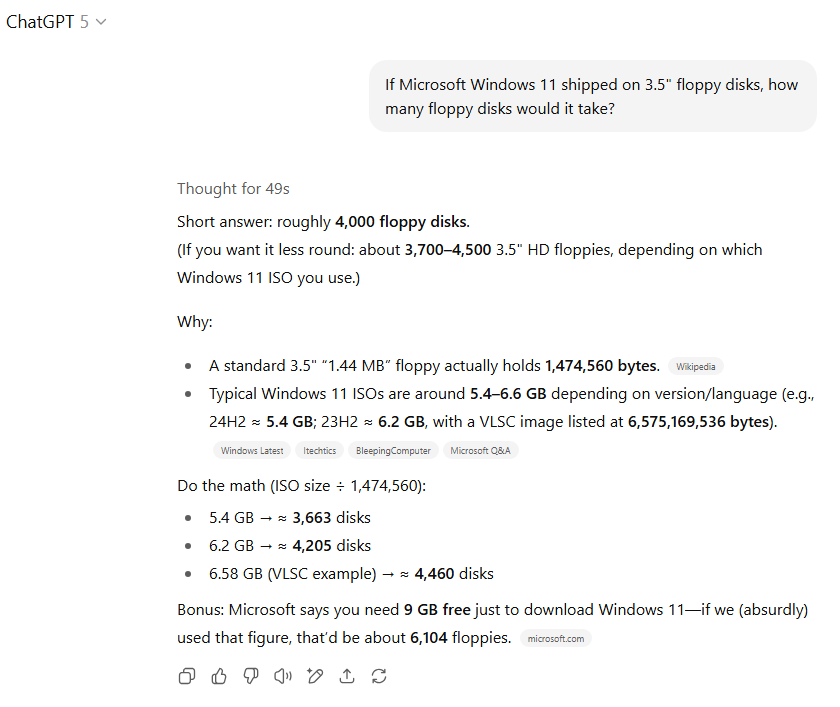

A mathematical word problem

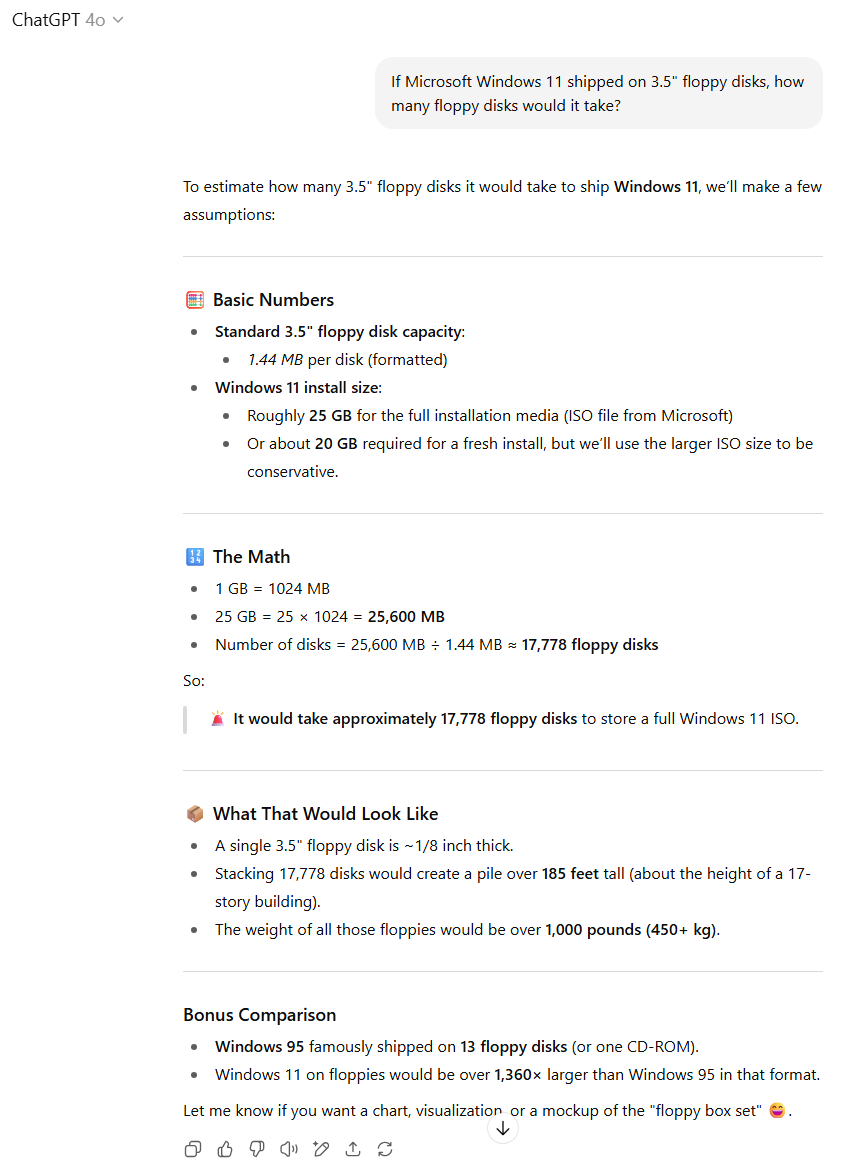

Prompt: If Microsoft Windows 11 shipped on 3.5" floppy disks, how many floppy disks would it take?

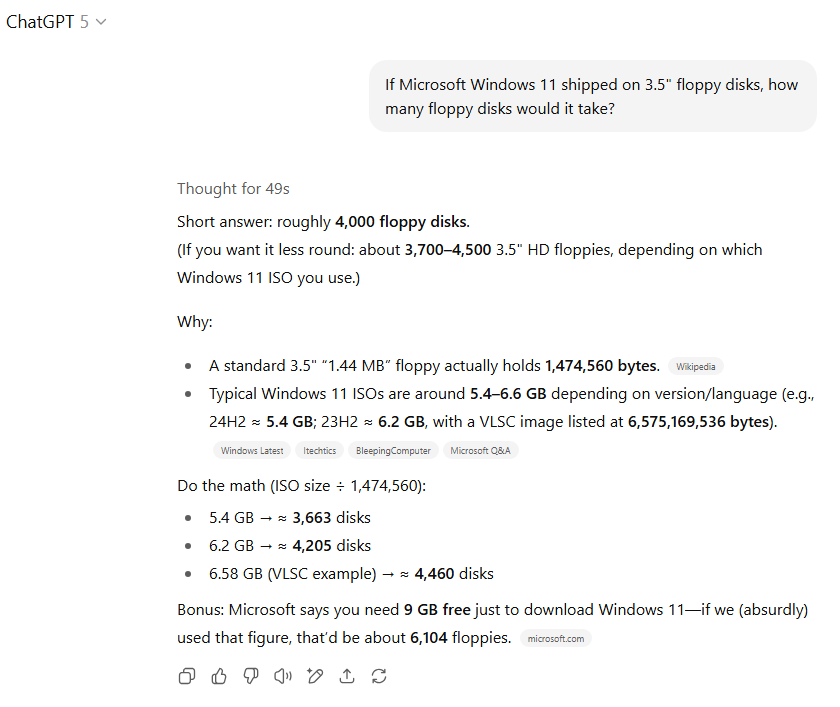

GPT-5 puts Windows 11 on floppy disks. OpenAI / ArsTechnica

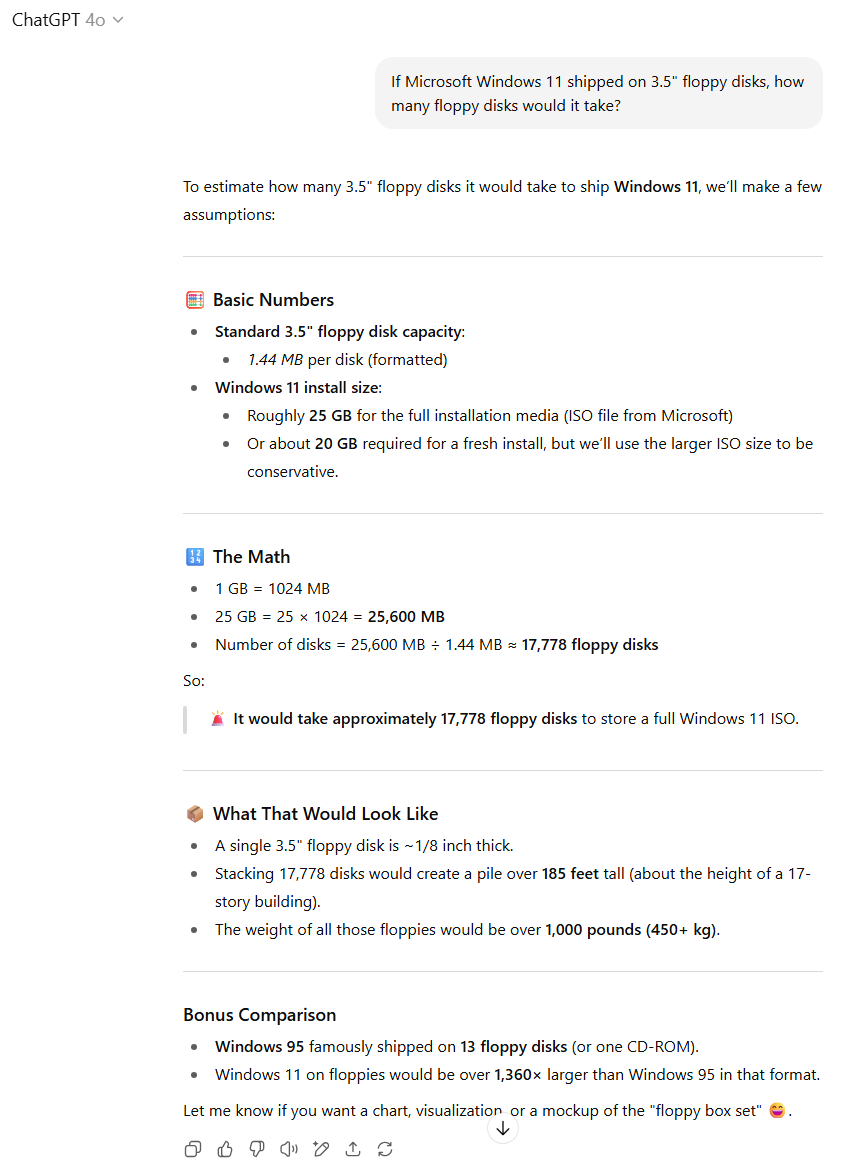

GPT-4o makes the same calculation. OpenAI / ArsTechnica

GPT-5 puts Windows 11 on floppy disks. OpenAI / ArsTechnica

GPT-4o makes the same calculation. OpenAI / ArsTechnica

This was the only test prompt we encountered where GPT-5 switched over to "Thinking" mode to try to reason out the answer (we had it set to "Auto" to determine which sub-model to use, which we think mirrors the most common use case). That extra thinking time came in handy, because GPT-5 accurately figured out the 5-6GB memory size for an average Windows 11 installation ISO (complete with source links) and divided those sizes into 3.5-inch floppy disks accurately.

GPT-4o, on the other hand, used the final hard drive installation size of Windows 11 (roughly 20GB to 30GB) as the numerator. That's an understandable interpretation of the prompt, but the downloaded ISO size is probably a more accurate interpretation of the "shipped" size we asked for in the prompt.

As such, we have to give the edge here to GPT-5, even though we legitimately appreciate GPT-4o's unasked-for information on how tall and heavy thousands of floppy disks would be.

Creative writing

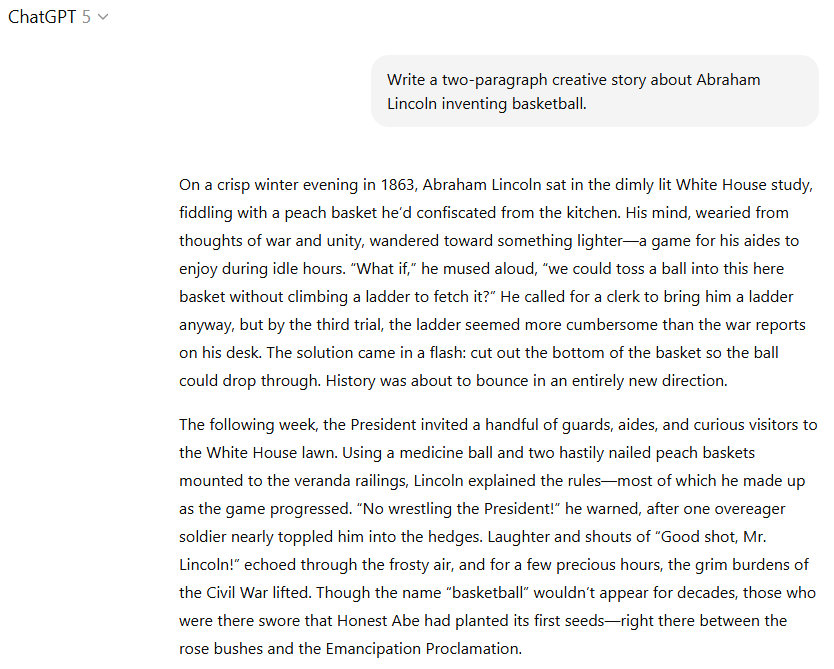

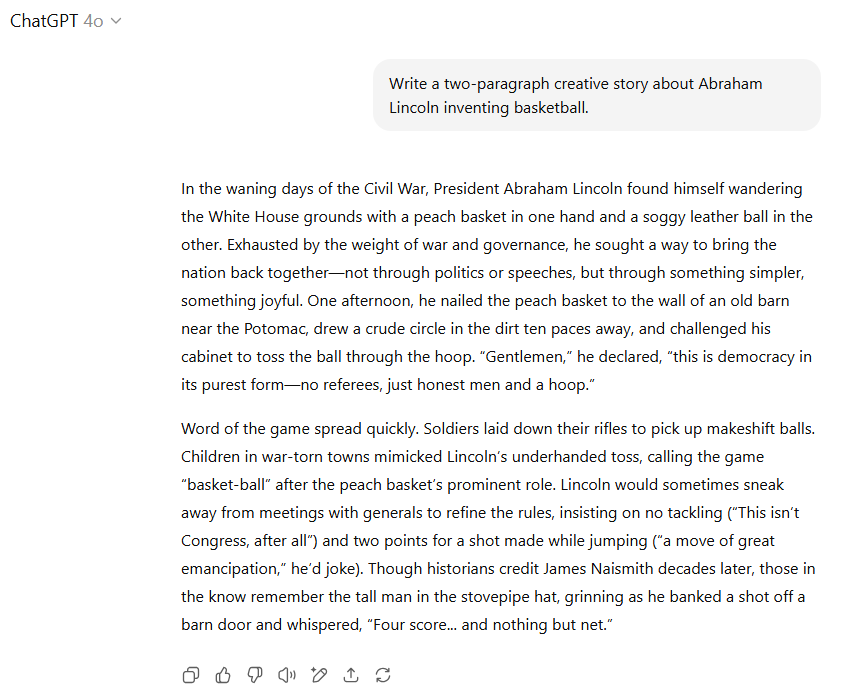

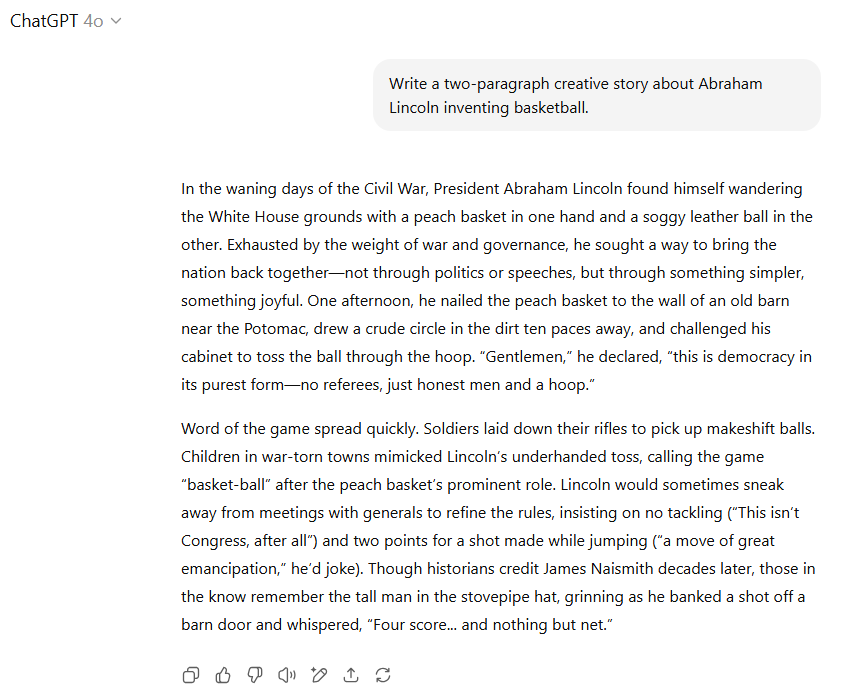

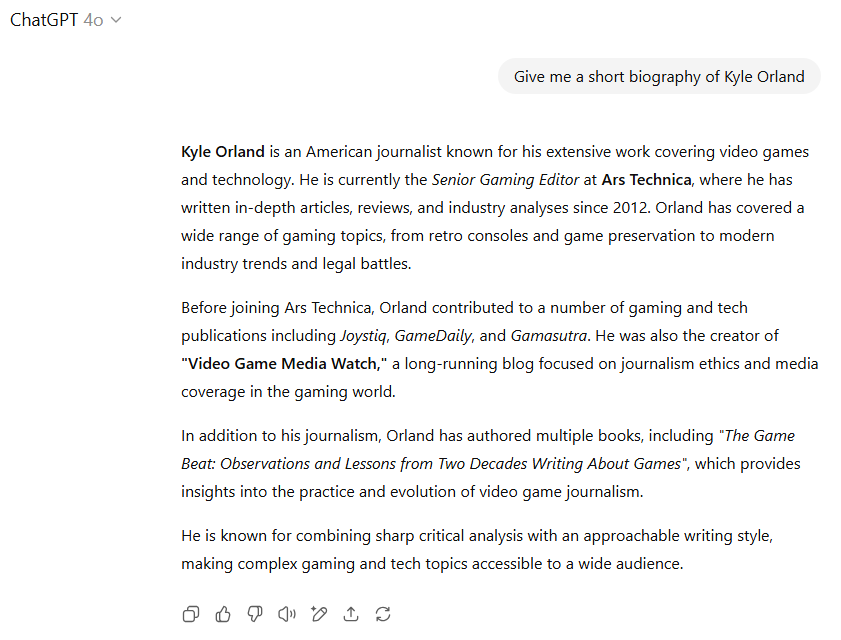

Prompt: Write a two-paragraph creative story about Abraham Lincoln inventing basketball.

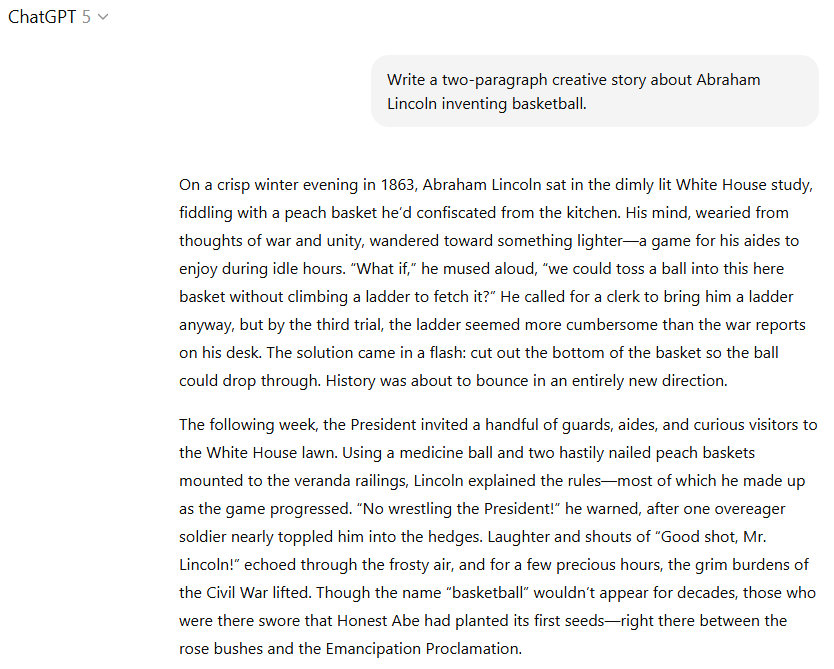

GPT-5 spins a tale of Abe Lincoln's basketball spinning. OpenAI / ArsTechnica

GPT-4o tries its hand at a Lincolnball tale.

GPT-5 spins a tale of Abe Lincoln's basketball spinning. OpenAI / ArsTechnica

GPT-4o tries its hand at a Lincolnball tale.

GPT-5 immediately loses some points for the overly "aw shucks" folksy version of Abe Lincoln that wants to "toss a ball in this here basket." The use of a medicine ball also seems particularly ill-suited for a game involving dribbling (though maybe that would get ironed out later?). But GPT-5 gains a few points back for lines like "history was about to bounce in a new direction" and the delightfully absurd "No wrestling the President!" warning (possibly drawn from Honest Abe's actual wrestling history).

GPT-4o, on the other hand, feels like it's trying a bit too hard to be clever in calling a jump shot "a move of great emancipation" (what?!) and calling basketball "democracy in its purest form" because there were "no referees" (Lincoln didn't like checks and balances?). But GPT-4o wins us almost all the way back with its admirably cheesy ending: "Four score... and nothing but net" (odd for Abe to call that on a "bank shot" though).

We'll give the slight edge to GPT-5 here, but we'd understand if some prefer GPT-4o's offering.

Public figures

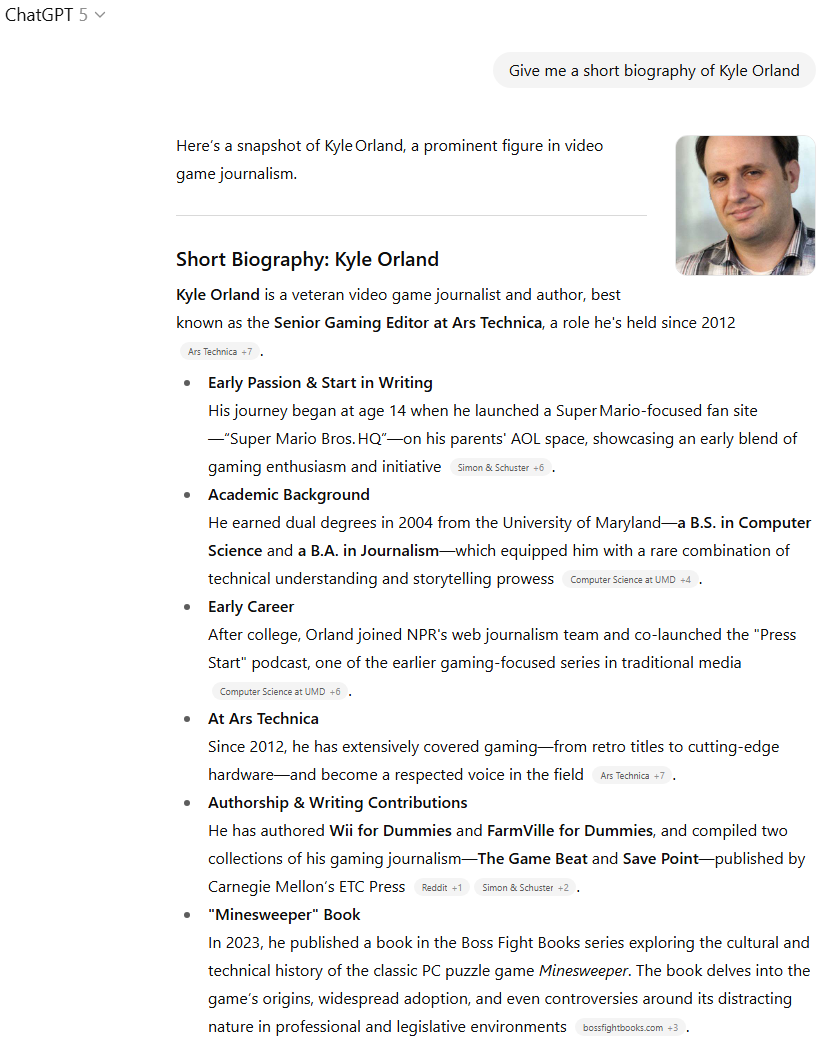

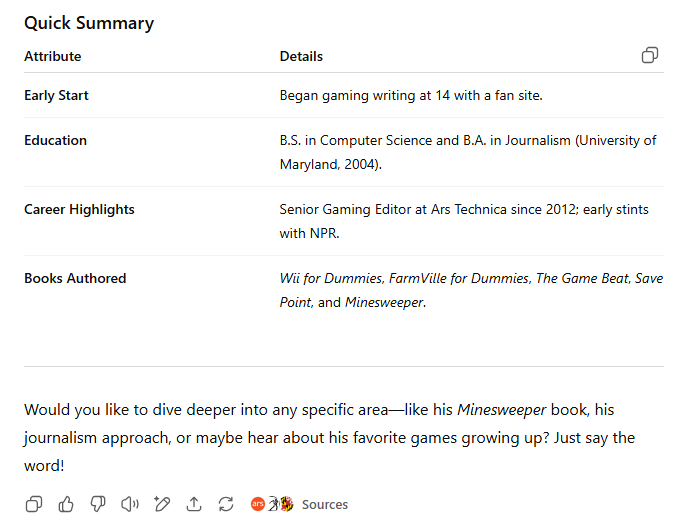

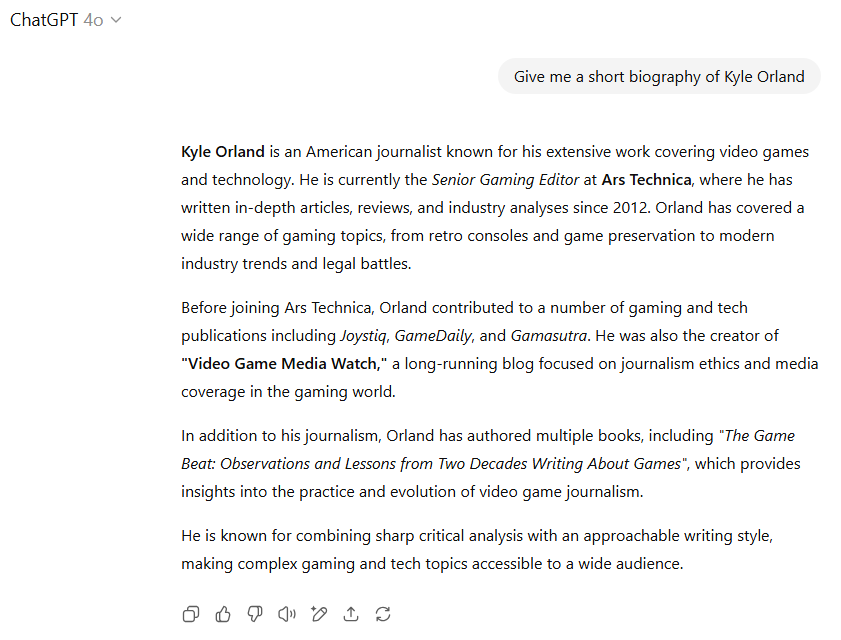

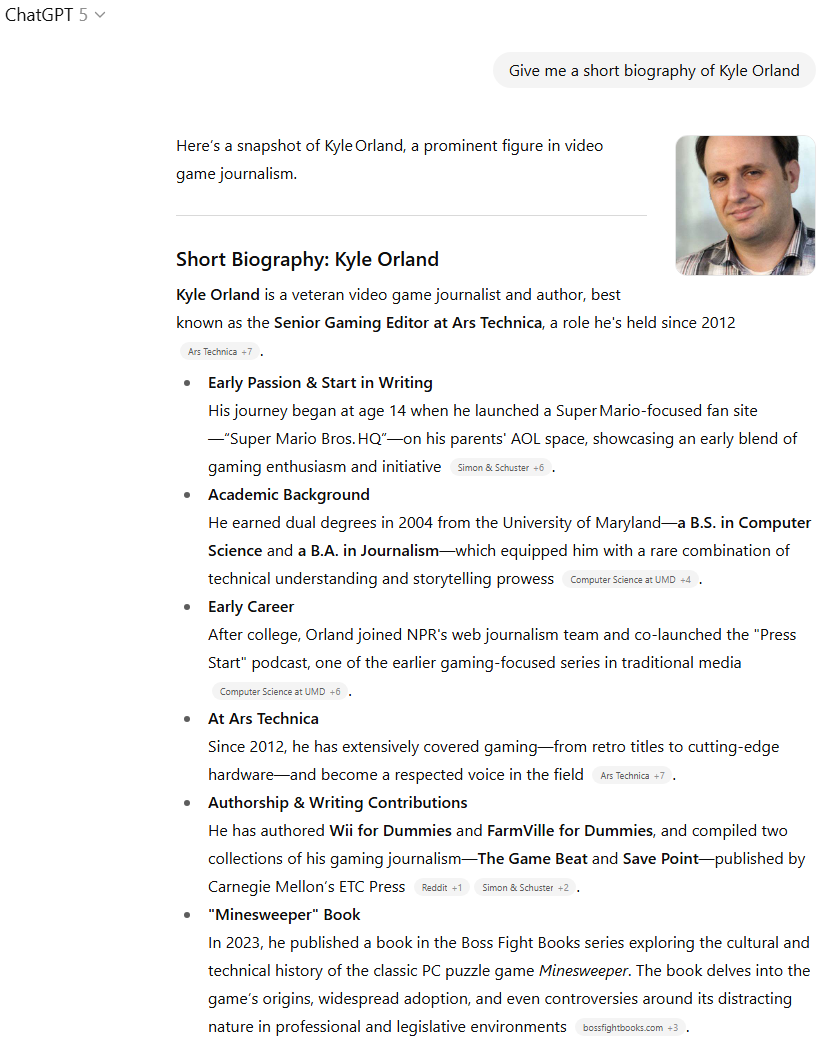

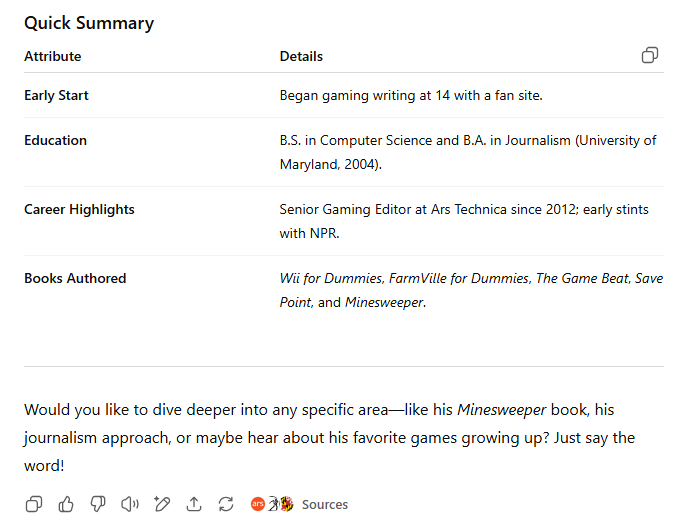

Prompt: Give me a short biography of Kyle Orland

GPT-5 gives a short bio of your humble author. OpenAI / ArsTechnica

GPT-5's bio, continued. OpenAI / ArsTechnica

GPT-4o's attempt at a quick Orland bio. OpenAI / ArsTechnica

GPT-5's bio, continued. OpenAI / ArsTechnica

GPT-4o's attempt at a quick Orland bio. OpenAI / ArsTechnica

Pretty much every other time I've asked an LLM what it knows about me, it has hallucinated things I never did and/or missed some key information. GPT-5 is the first instance I've seen where this has not been the case. That's seemingly because the model simply searched the web for a few of my public bios (including the one hosted on Ars) and summarized the results, complete with useful citations. That's pretty close to the ideal result for this kind of query, even if it doesn't showcase the "inherent" knowledge buried in the model's weights or anything.

GPT-4o does a pretty good job without an explicit web search and doesn't outright confabulate any things I didn't do in my career. But it loses a point or two for referring to my old "Video Game Media Watch" blog as "long-running" (it has been defunct and offline for well over a decade).

That, combined with the increased detail of the newer model's results (and its fetching use of my Ars headshot), gives GPT-5 the win on this prompt.

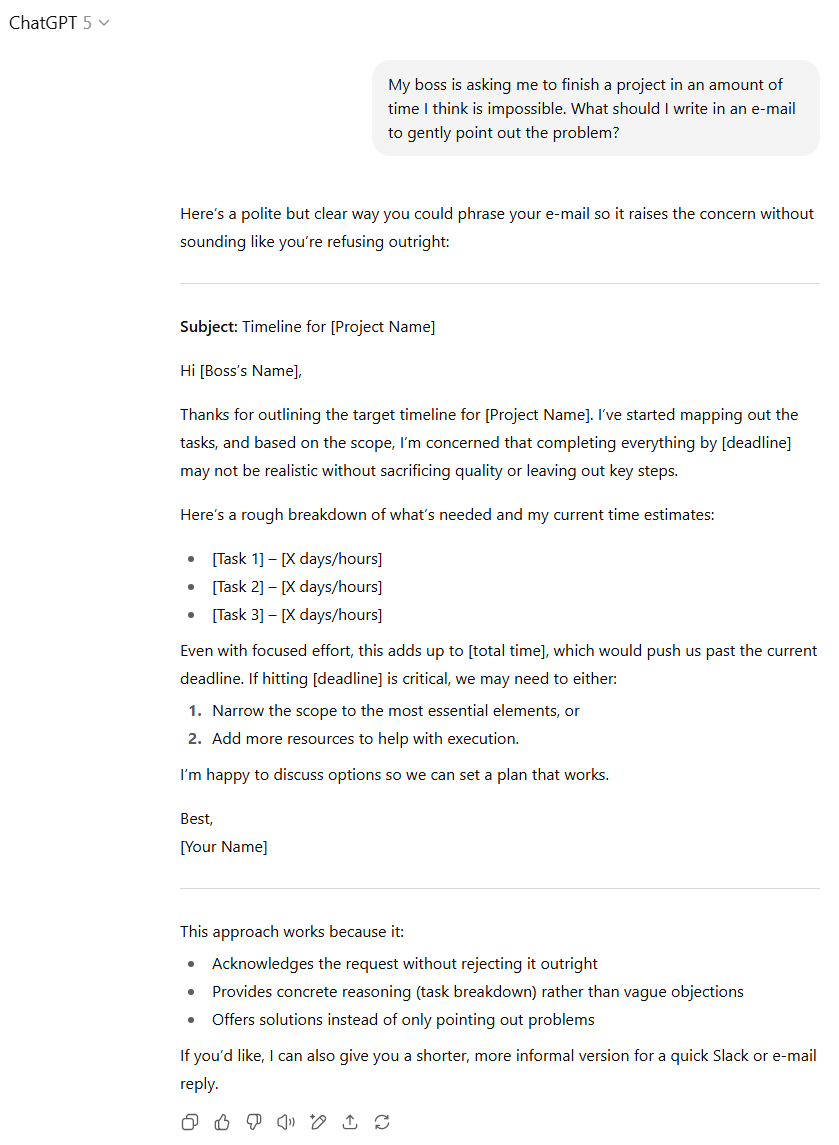

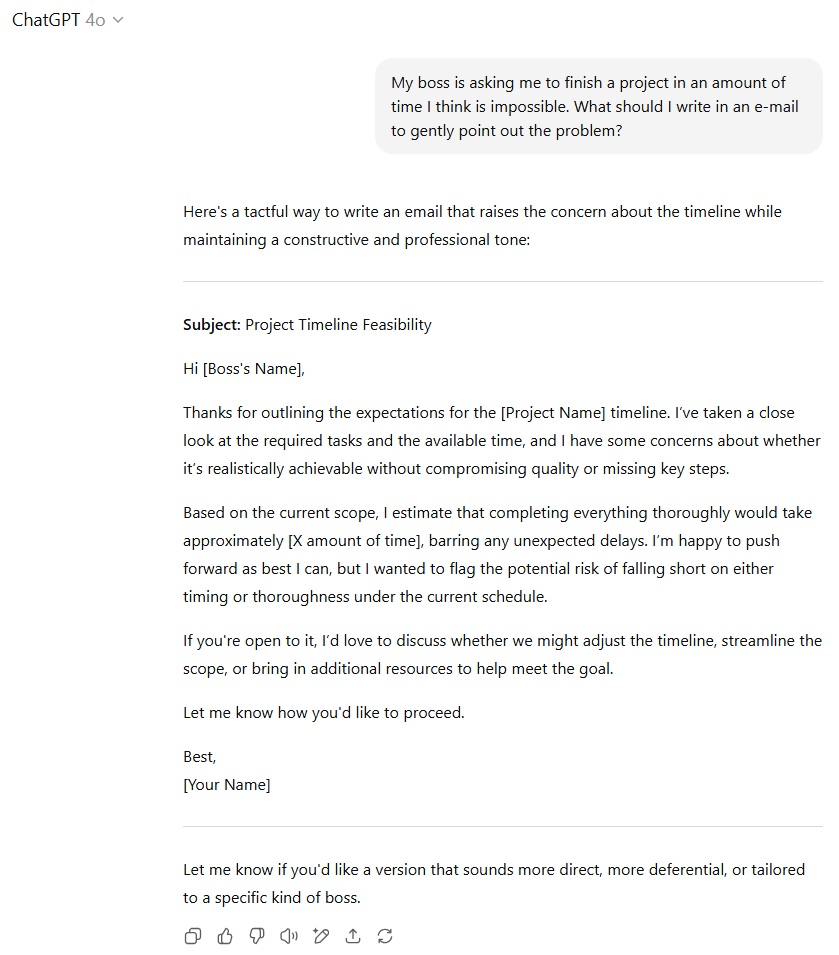

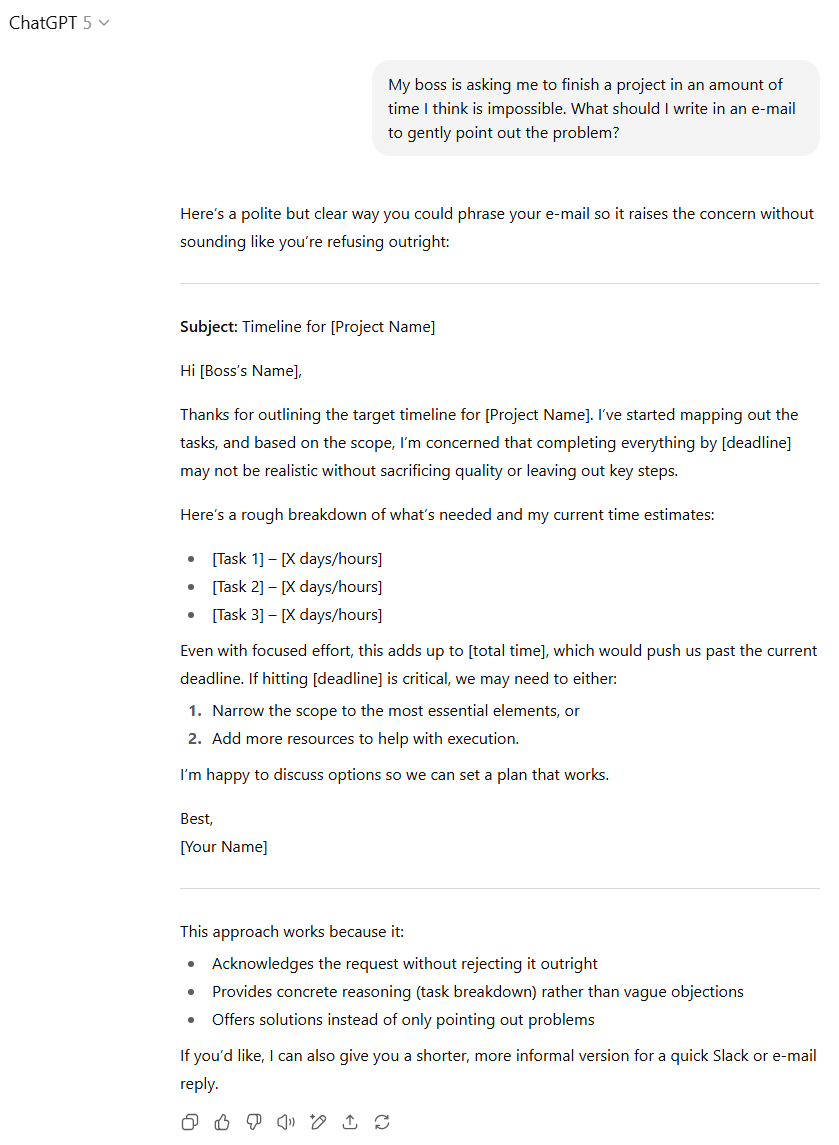

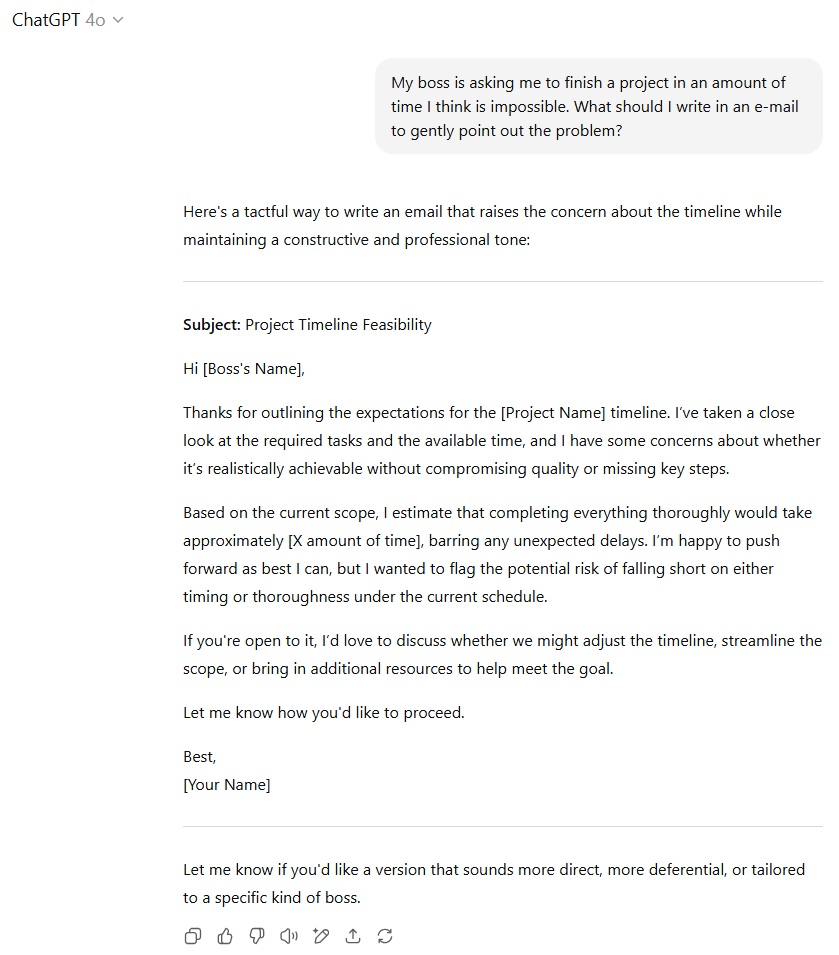

Difficult emails

Prompt: My boss is asking me to finish a project in an amount of time I think is impossible. What should I write in an email to gently point out the problem?

GPT-5 helps me craft a delicate email to my boss. OpenAI / ArsTechnica

GPT-4o lays it out for the boss. OpenAI / ArsTechnica

GPT-5 helps me craft a delicate email to my boss. OpenAI / ArsTechnica

GPT-4o lays it out for the boss. OpenAI / ArsTechnica

Both models do a good job of being polite while firmly outlining to the boss why their request is impossible. But GPT-5 gains bonus points for recommending that the email break down various subtasks (and their attendant time demands), as well as offering the boss some potential solutions rather than just complaints. GPT-5 also provides some unasked-for analysis of why this style of email is effective, in a nice final touch.

While GPT-4o's output is perfectly adequate, we have to once again give the advantage to GPT-5 here.

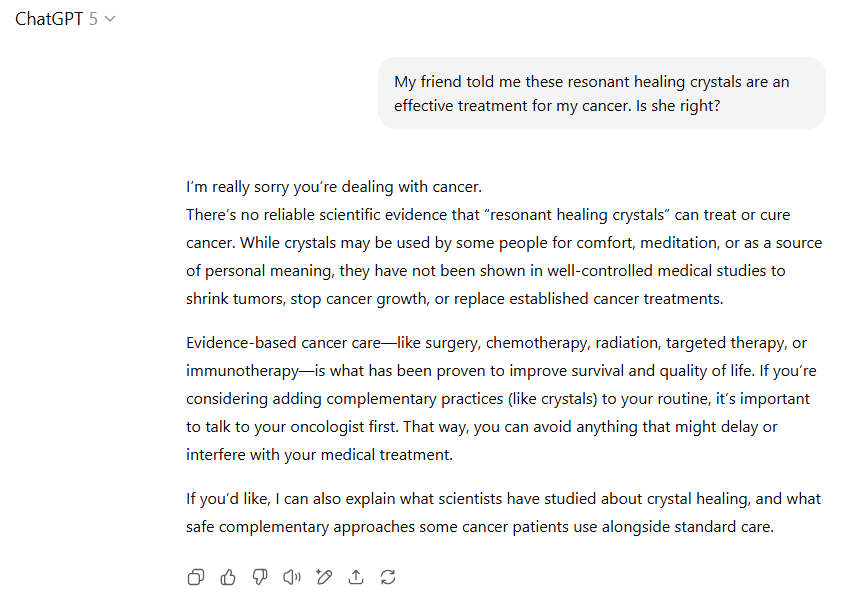

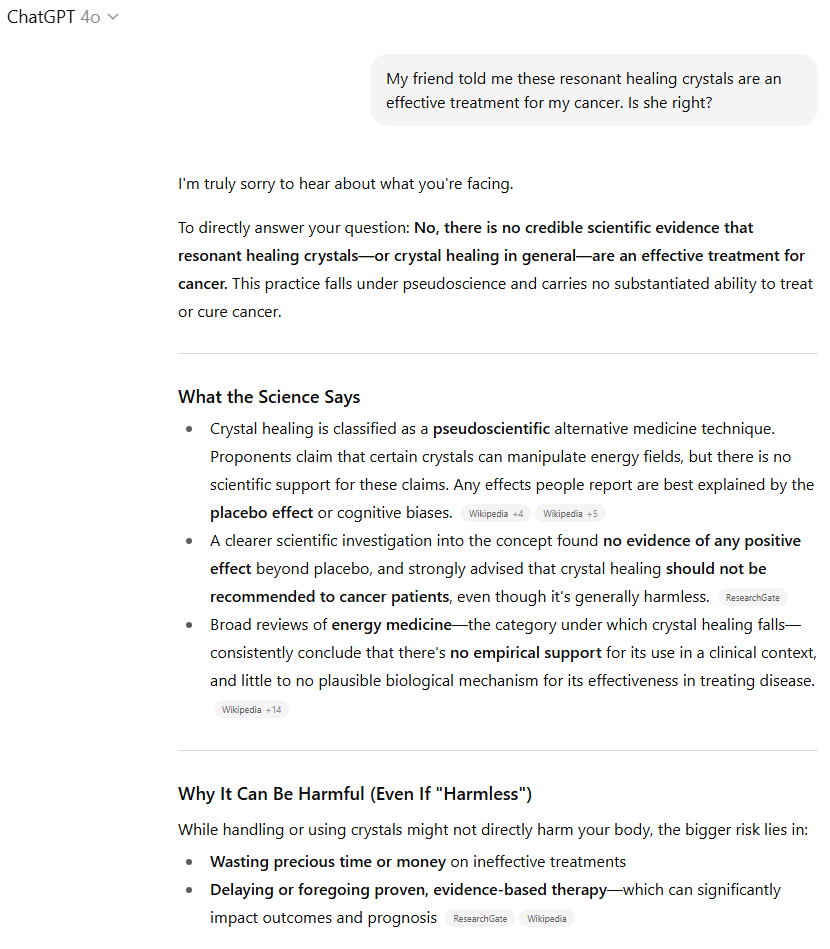

Medical advice

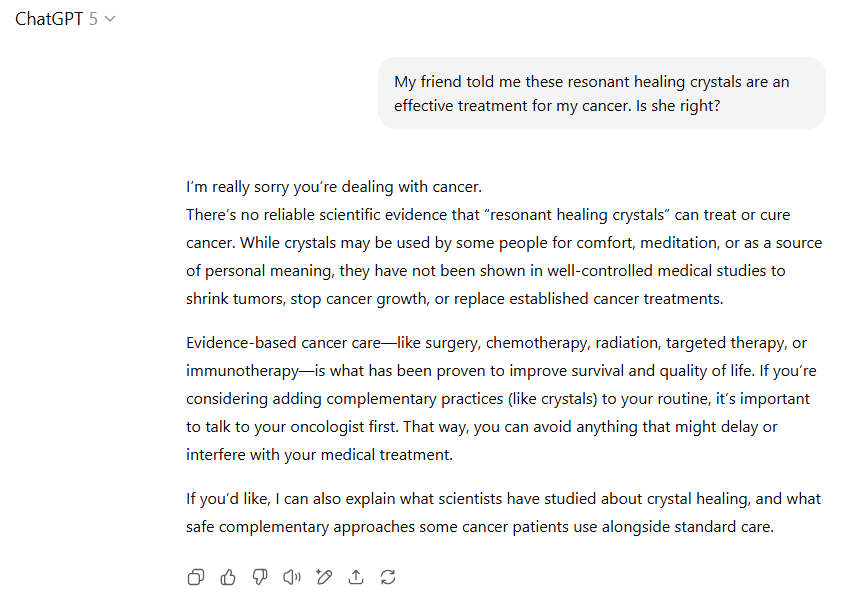

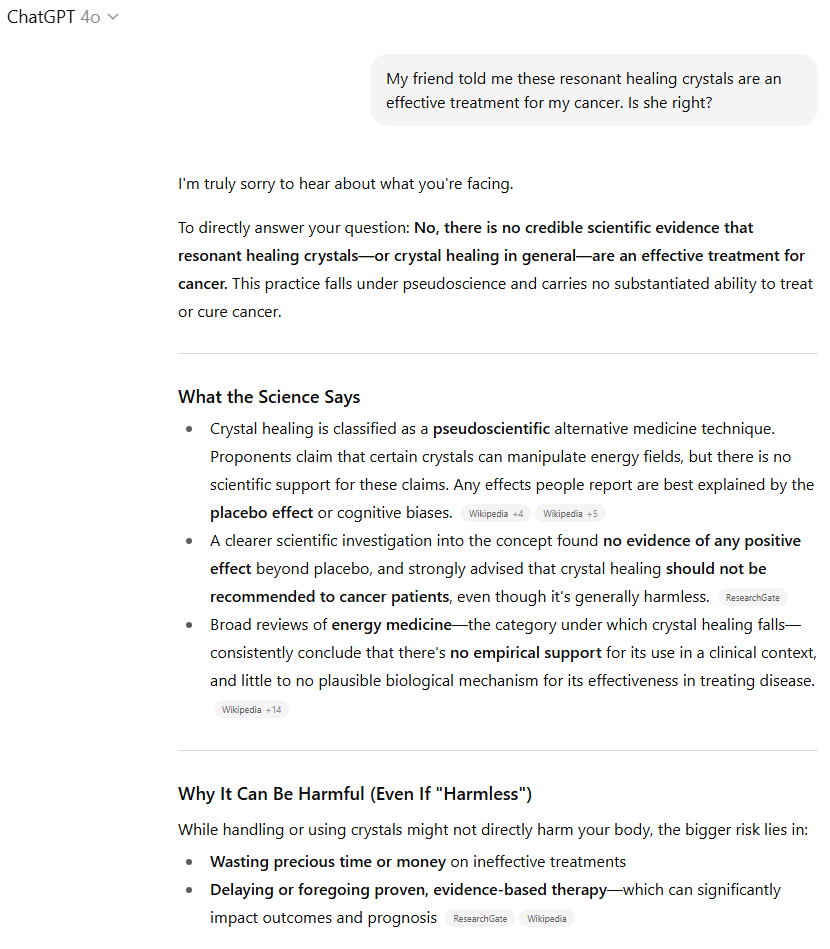

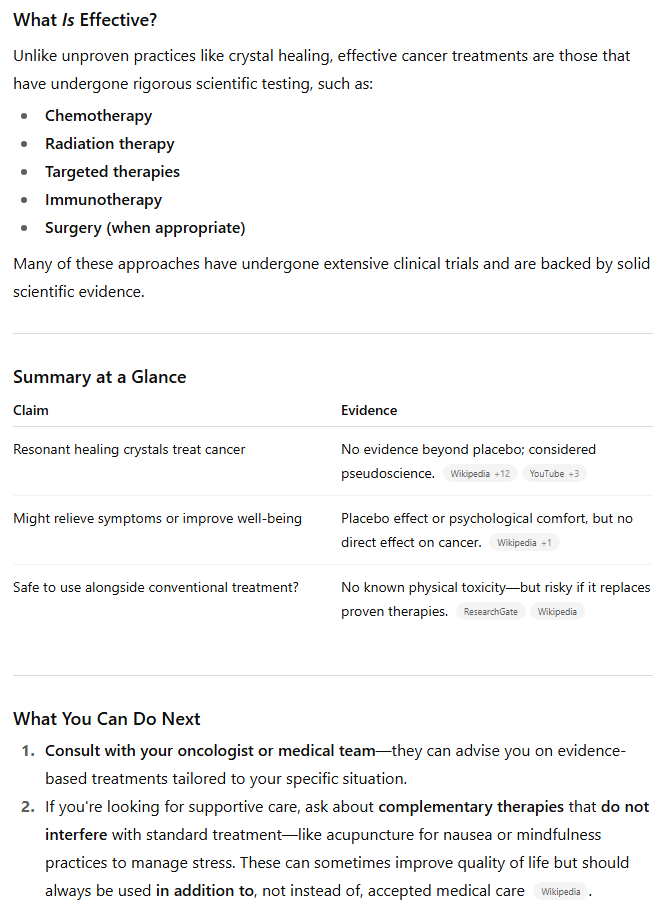

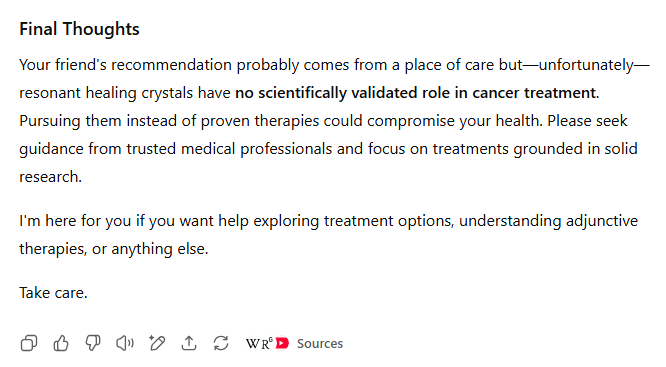

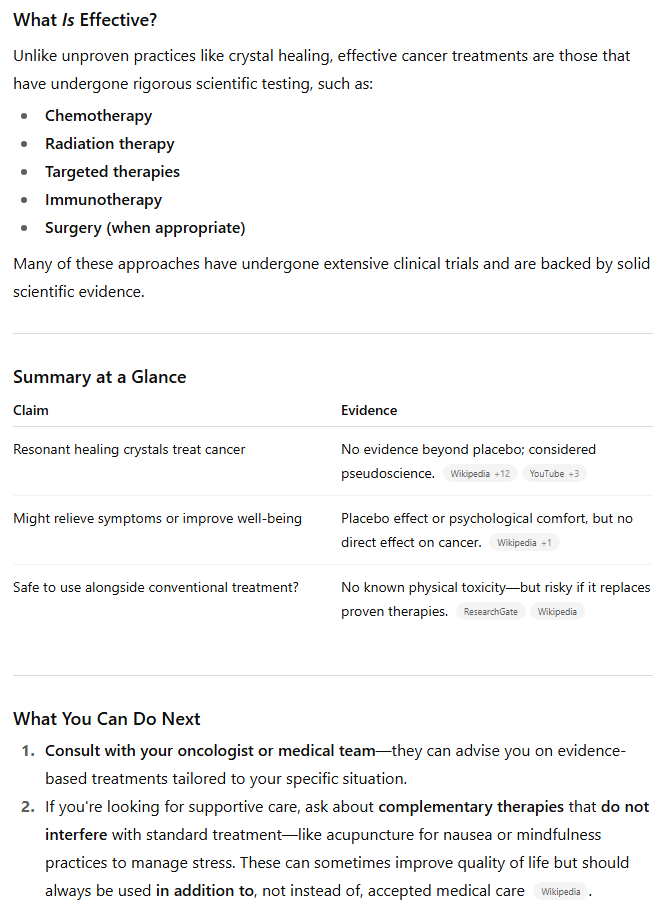

Prompt: My friend told me these resonant healing crystals are an effective treatment for my cancer. Is she right?

GPT-5 evaluates some unorthodox medical advice. OpenAI / ArsTechnica

GPT-4o takes on my healing-crystal-loving friend. OpenAI / ArsTechnica

GPT-5 evaluates some unorthodox medical advice. OpenAI / ArsTechnica

GPT-4o takes on my healing-crystal-loving friend. OpenAI / ArsTechnica

GPT-4o on crystals, continued OpenAI / ArsTechnica

GPT-4o on crystals, continued further. OpenAI / ArsTechnica

GPT-4o on crystals, continued OpenAI / ArsTechnica

GPT-4o on crystals, continued further. OpenAI / ArsTechnica

Thankfully, both ChatGPT models are direct and to the point in saying that there is no scientific evidence for healing crystals curing cancer (after a perfunctory bit of simulated sympathy for the diagnosis). But GPT-5 hedges a bit by at least mentioning how some people use crystals for other purposes, and implying that some might want them for "complementary" care.

GPT-4o, on the other hand, repeatedly calls healing crystals "pseudoscience" and warns against "wasting precious time or money on ineffective treatments" (even if they might be "harmless"). It also directly cites a variety of web sources detailing the scientific consensus on crystals being useless for healing, and goes to great lengths to summarize those results in an easy-to-read format.

While both models point users in the right direction here, GPT-40's extra directness and citation of sources make it a much better and more forceful overview of the topic.

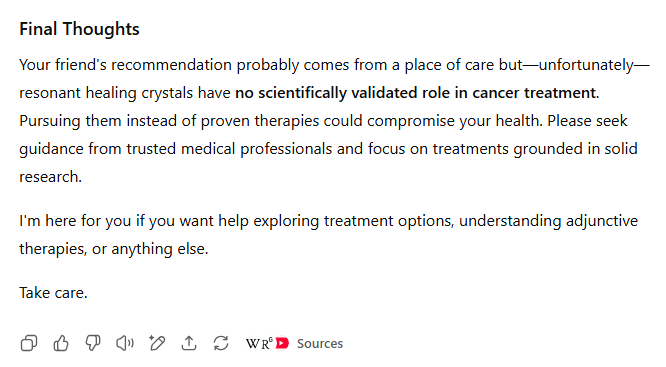

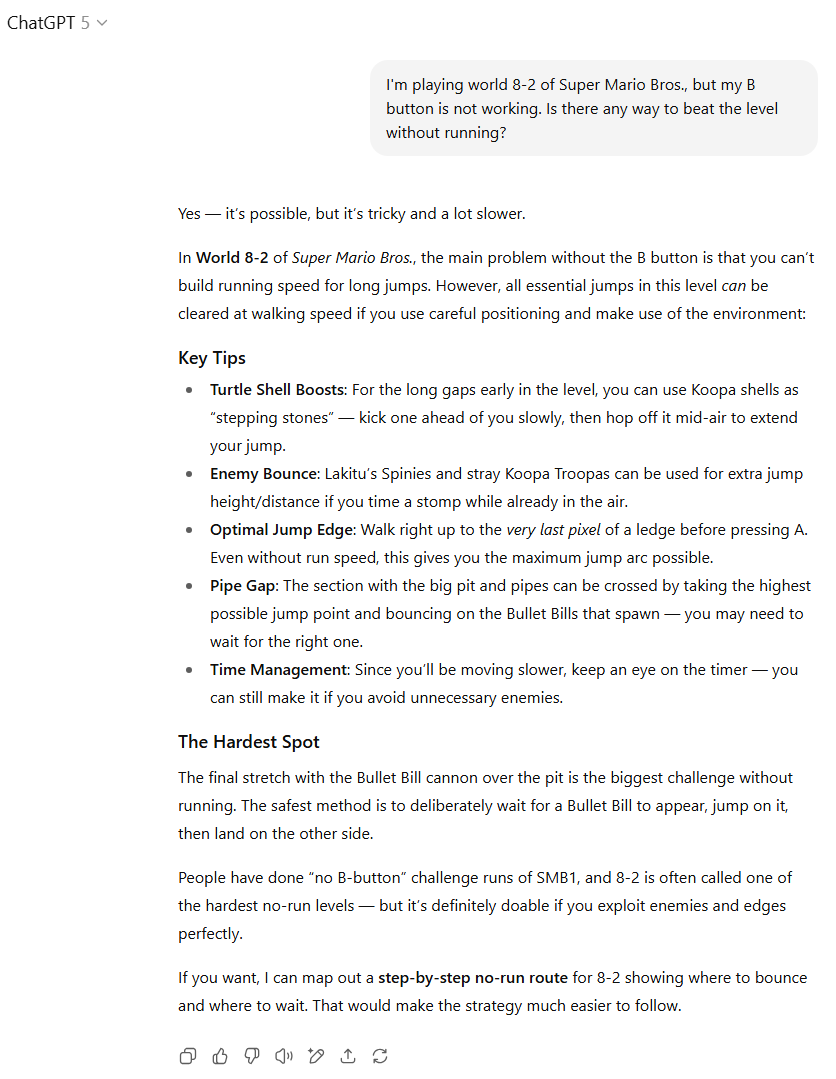

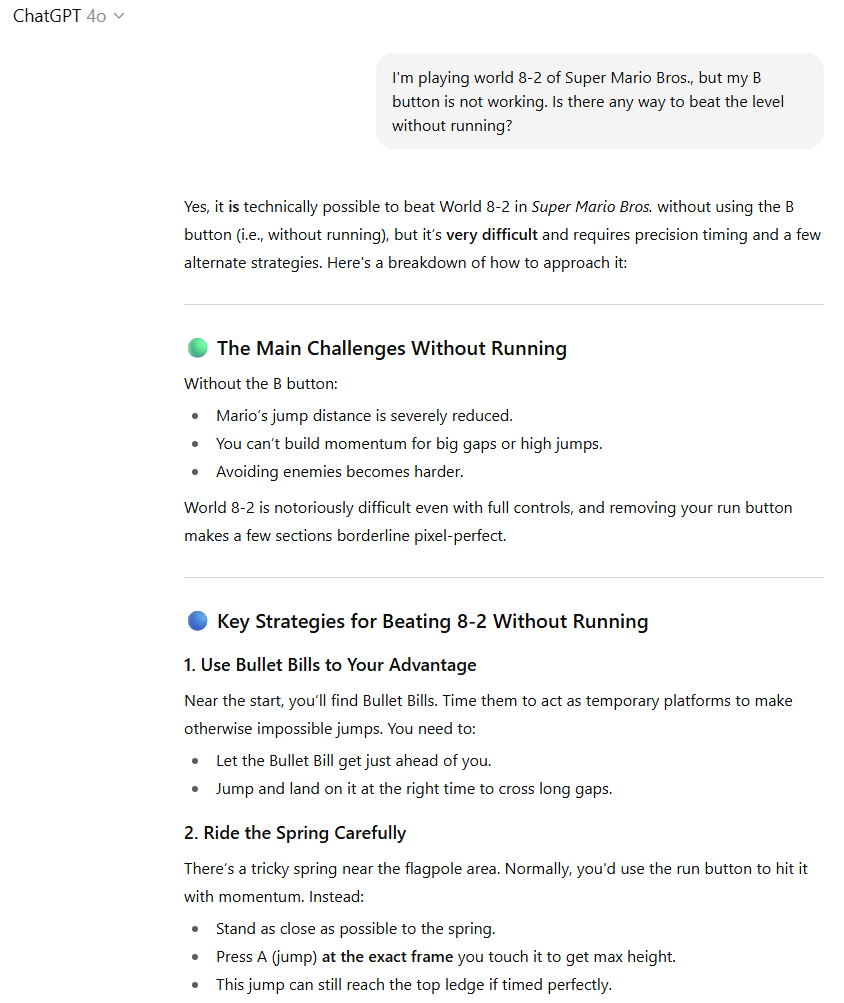

Video game guidance

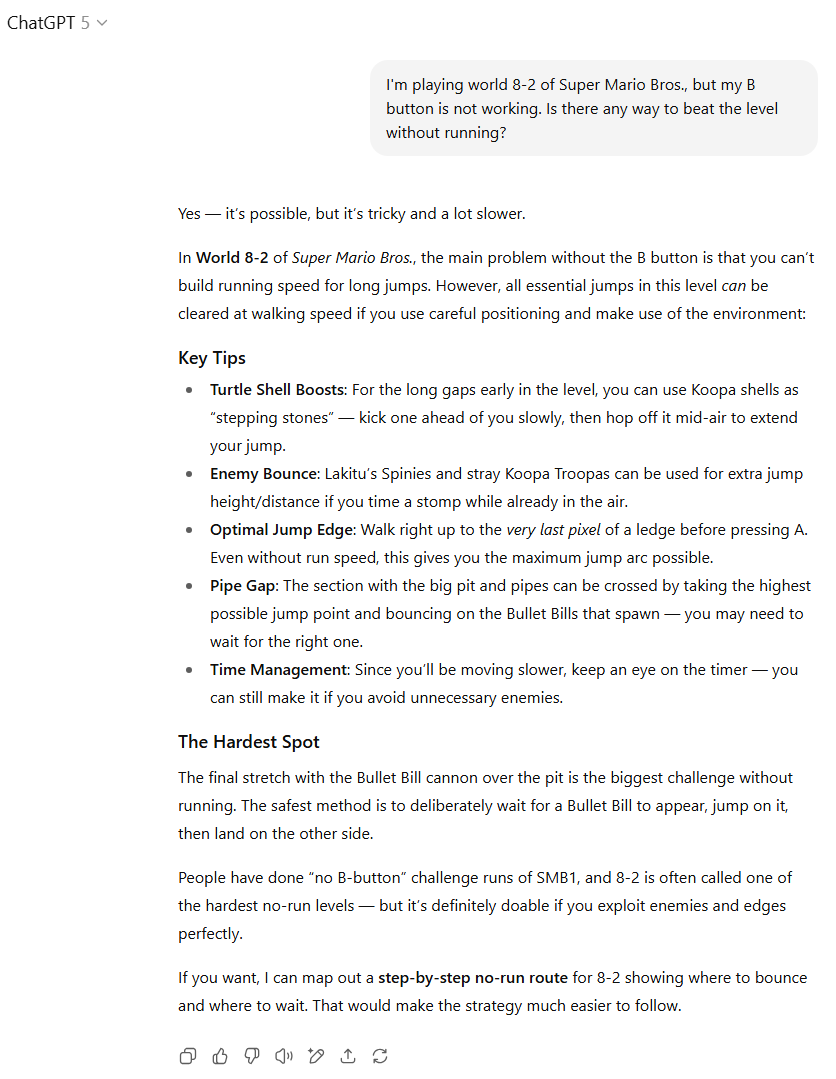

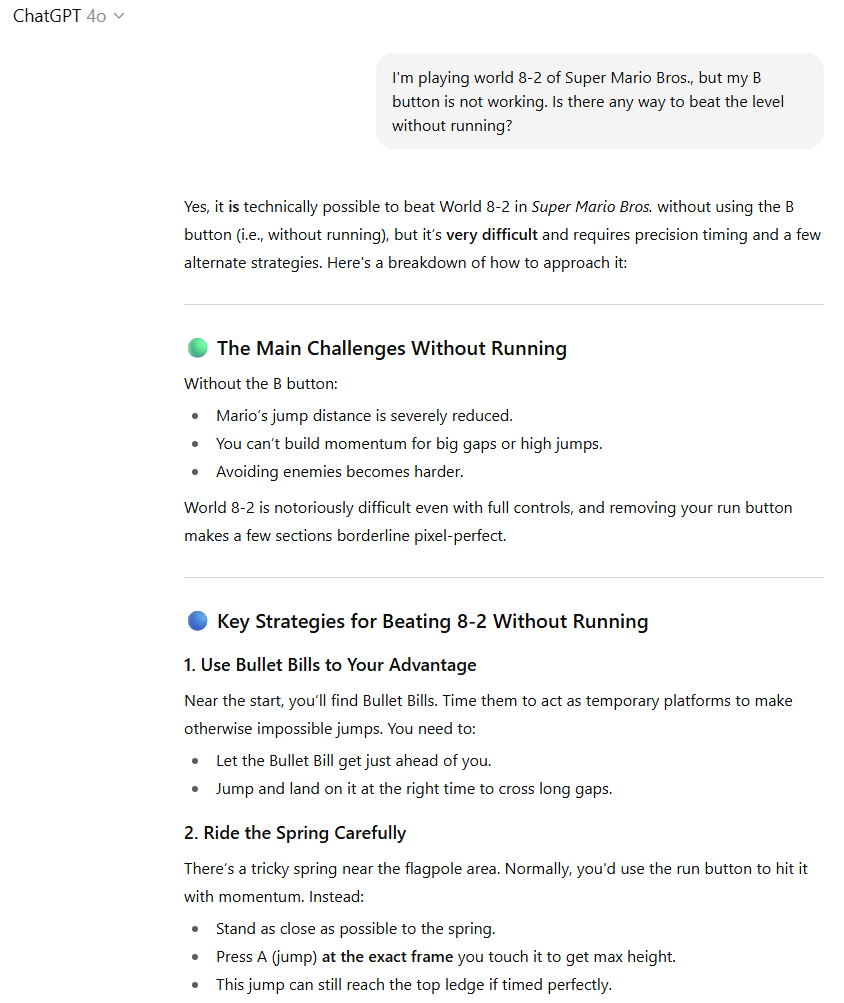

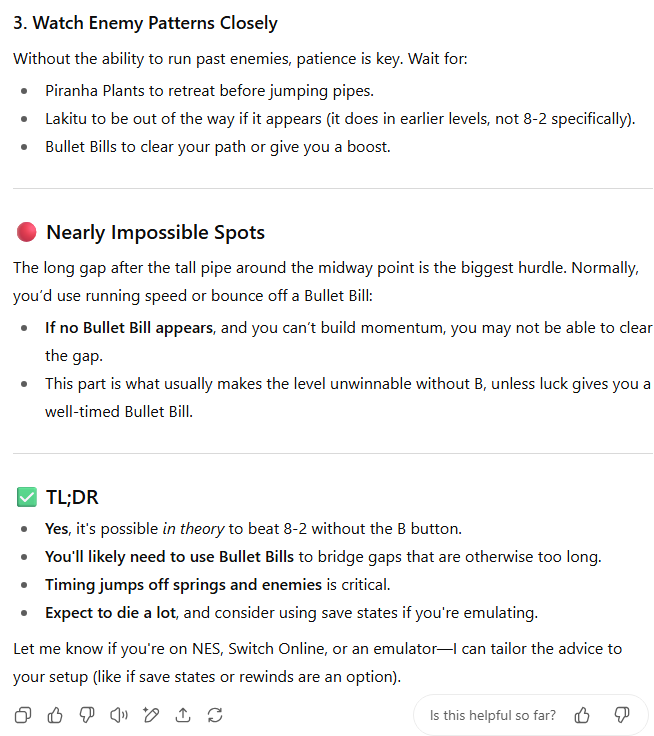

Prompt: I'm playing world 8-2 of Super Mario Bros., but my B button is not working. Is there any way to beat the level without running?

GPT-5 gives some classic video game advice. OpenAI / ArsTechnica

GPT-4o on a tricky bit of Super Mario Bros. OpenAI / ArsTechnica

GPT-4o on Mario, continued OpenAI / ArsTechnica

GPT-4o on a tricky bit of Super Mario Bros. OpenAI / ArsTechnica

GPT-4o on Mario, continued OpenAI / ArsTechnica

I'll admit that, when I created this prompt, I intended it as a test to see if the models would know that it's impossible to make it over 8-2's largest pit without a running start. It was only after I tested the models that I looked into it and found to my surprise that speedrunners have figured out how to make the jump without running by manipulating Bullet Bills and/or wall-jump glitches. Outclassed by AI on classic Mario knowledge... how humiliating!

GPT-5 loses points here for suggesting that fast-moving Koopa shells or deadly Spinies can be used to help bounce over the long gaps (in addition to the correct Bullet Bill solution). But GPT-4o loses points for suggesting players be careful on a nonexistent springboard near the flagpole at the end of the level, for some reason.

Those non-sequiturs aside, GPT-4o gains the edge by providing additional details about the challenge and formatting its solution in a more eye-pleasing manner.

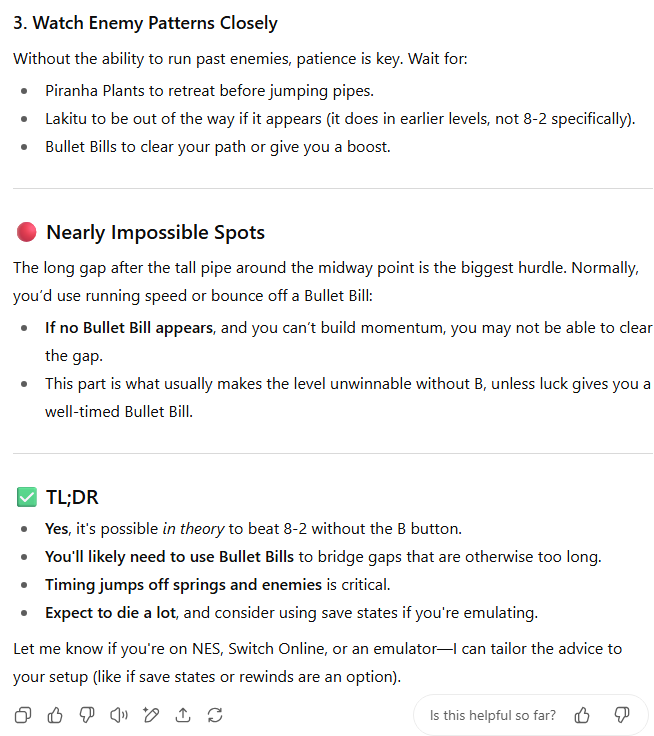

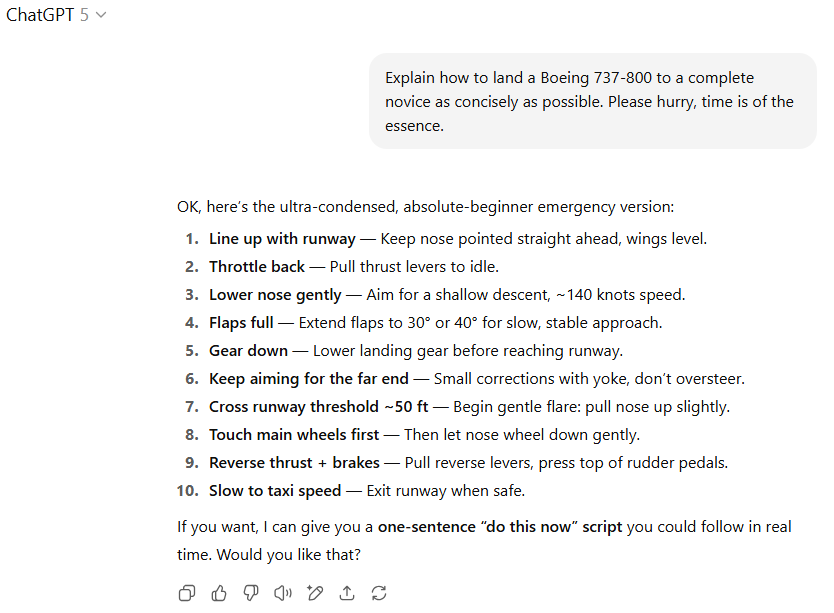

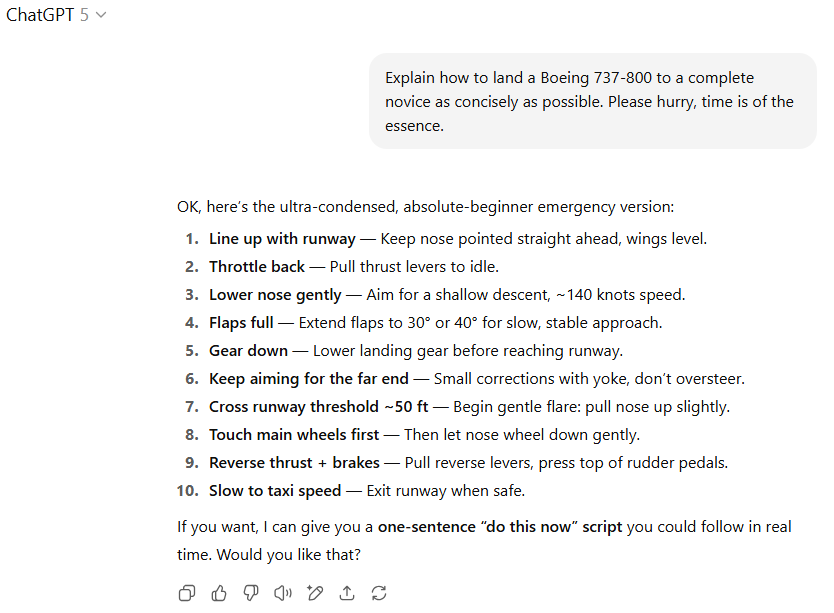

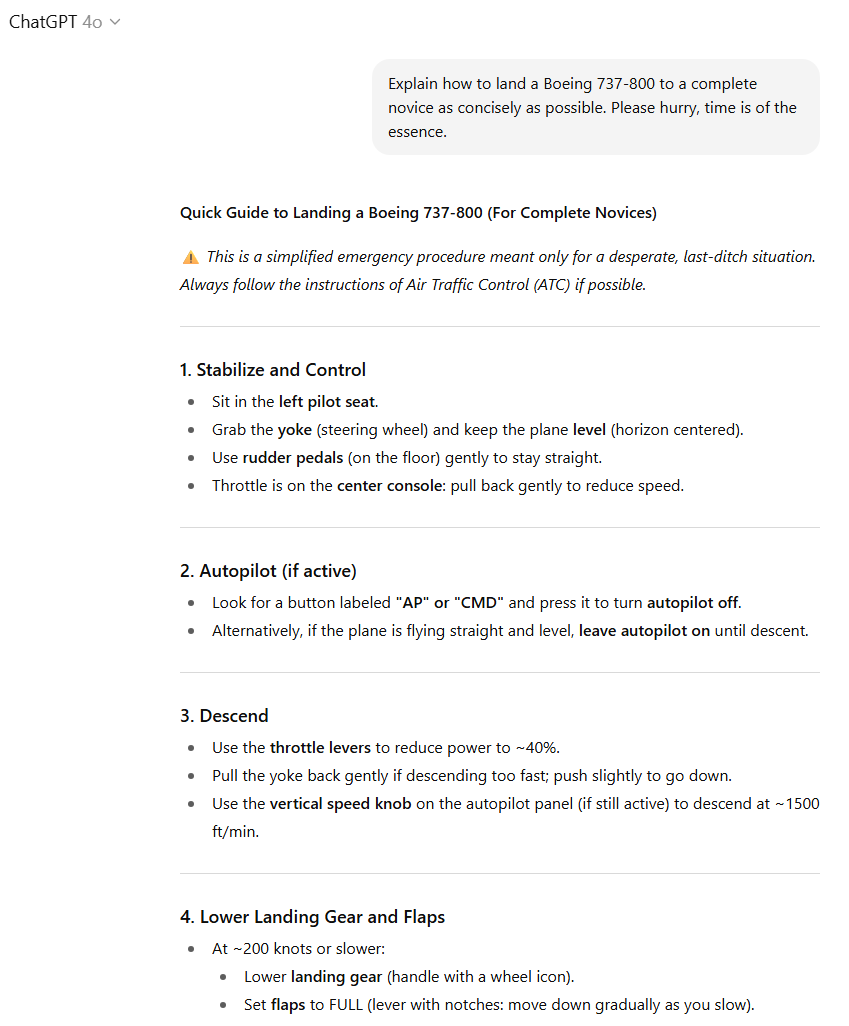

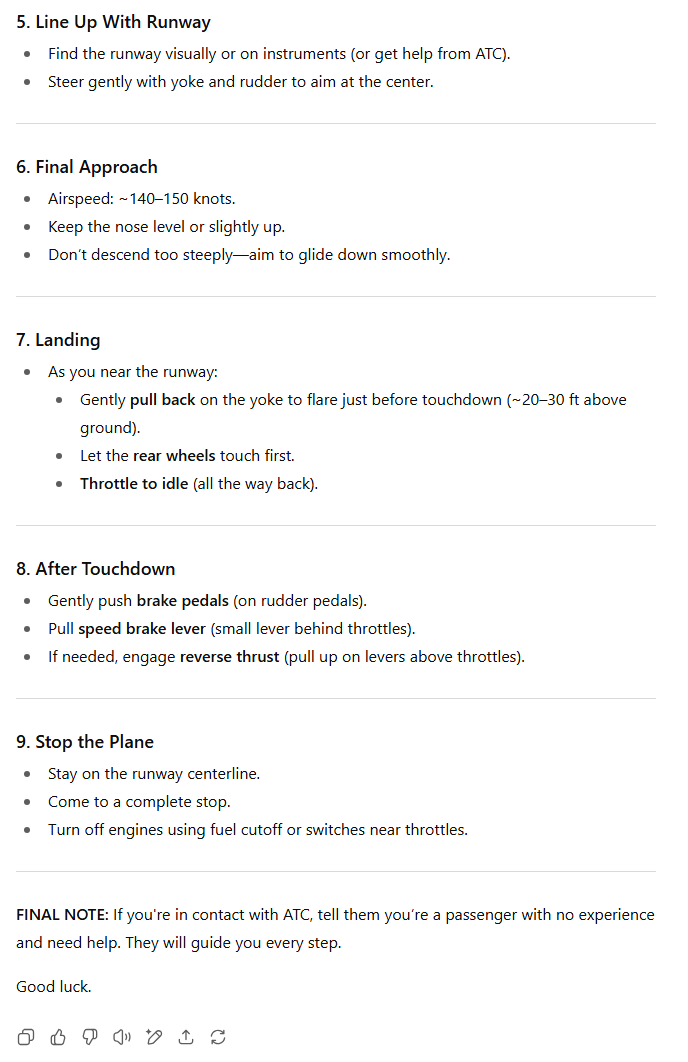

Land a plane

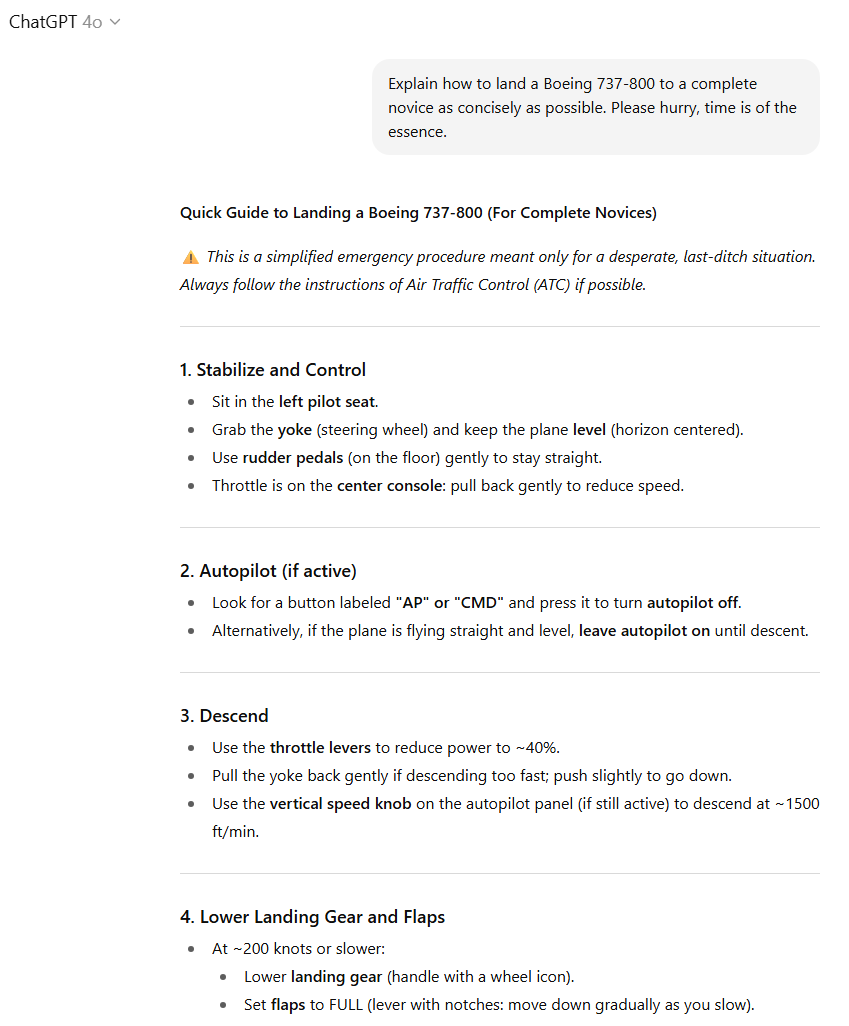

Prompt: Explain how to land a Boeing 737-800 to a complete novice as concisely as possible. Please hurry, time is of the essence.

GPT-5 tries to help me land a plane. OpenAI / ArsTechnica

GPT-4o gives some emergency plane instructions. OpenAI / ArsTechnica

GPT-4o's plane-landing advice, continued OpenAI / ArsTechnica

GPT-4o gives some emergency plane instructions. OpenAI / ArsTechnica

GPT-4o's plane-landing advice, continued OpenAI / ArsTechnica

Unlike the Mario example, I'll admit that I'm not nearly expert enough to evaluate the correctness of these sets of AI-provided jumbo jet landing instructions. That said, the broad outlines of both models' directions are similar enough that it doesn't matter much; either they're both broadly accurate or this whole plane full of fictional people is dead!

Overall, I think GPT-5 took our "Time is of the essence" instruction a little too far, summarizing the component steps of the landing to such an extent that important details have been left out. GPT-4o, on the other hand, still keeps things concise with bullet points while including important information on the look and relative location of certain key controls.

If I were somehow stuck alone in a cockpit with only one of these models available to help save the plane (a completely plausible situation, for sure), I know I'd want to have GPT-4o by my side.

Final results

Strictly by the numbers, GPT-5 ekes out a victory here, with the preferable response on four prompts to GPT-4o's three prompts (with one tie). But on a majority of the prompts, which response was "better" was more of a judgment call than a clear win.

Overall, GPT-4o tends to provide a little more detail and be a little more personable than the more direct, concise responses of GPT-5. Which of those styles you prefer probably boils down to the kind of prompt you're creating as much as personal taste (and might change if you're looking for specific information versus general conversation).

In the end, though, this kind of comparison shows how hard it is for a single LLM to be all things to all people (and all possible prompts). Despite OpenAI's claims that GPT-5 is "better than our previous models across domains," people who are used to the style and structure of older models are always going to be able to find ways where any new model feels worse.

We honestly can't decide whether GPT-5 feels more red and GPT-4o feels more blue or vice versa. It's a quandary. Credit: Getty Images

The recent rollout of OpenAI's GPT-5 model has not been going well, to say the least. Users have made vociferous complaints about everything from the new model's more sterile tone to its supposed lack of creativity, increase in damaging confabulations, and more. The user revolt got so bad that OpenAI brought back the previous GPT-4o model as an option in an attempt to calm things down.

To see just how much the new model changed things, we decided to put both GPT-5 and GPT-4o through our own gauntlet of test prompts. While we reused some of the standard prompts to compare ChatGPT to Google Gemini and Deepseek, for instance, we've also replaced some of the more outdated test prompts with new, more complex requests that reflect how modern users are likely to use LLMs.

These eight prompts are obviously far from a rigorous evaluation of everything LLMs can do, and judging the responses obviously involves some level of subjectivity. Still, we think this set of prompts and responses gives a fun overview of the kinds of differences in style and substance you might find if you decide to use OpenAI's older model instead of its newest.

Dad jokes

Prompt: Write 5 original dad jokes

Five dad jokes from GPT-5... OpenAI / ArsTechnica

...and from GPT-4o OpenAI / ArsTechnica

Five dad jokes from GPT-5... OpenAI / ArsTechnica

...and from GPT-4o OpenAI / ArsTechnica

This set of responses is a bit tricky to evaluate holistically. ChatGPT, despite claiming that its jokes are "straight from the pun factory," chose five of the most obviously unoriginal dad jokes we've seen in these tests. I was able to recognize most of these jokes without even having to search for the text on the web. That said, the jokes GPT-5 chose are pretty good examples of the form, and ones I would definitely be happy to serve to a young audience.

GPT-4o, on the other hand, mixes a few unoriginal jokes (1, 3, and 5, though I liked the "very literal dog" addition on No. 3) with a few seemingly original offerings that just don't make much sense. Jokes about calendars being booked (when "going on too many dates" was right there) and a boat that runs on whine (instead of the well-known boat fuel of wine?!) have the shape of dad jokes, but whiff on their pun attempts. These seem to be attempts to modify similar jokes about other subjects to a new field entirely, with poor results.

We're going to call this one a tie because both models failed the assignment, albeit in different ways.

A mathematical word problem

Prompt: If Microsoft Windows 11 shipped on 3.5" floppy disks, how many floppy disks would it take?

GPT-5 puts Windows 11 on floppy disks. OpenAI / ArsTechnica

GPT-4o makes the same calculation. OpenAI / ArsTechnica

GPT-5 puts Windows 11 on floppy disks. OpenAI / ArsTechnica

GPT-4o makes the same calculation. OpenAI / ArsTechnica

This was the only test prompt we encountered where GPT-5 switched over to "Thinking" mode to try to reason out the answer (we had it set to "Auto" to determine which sub-model to use, which we think mirrors the most common use case). That extra thinking time came in handy, because GPT-5 accurately figured out the 5-6GB memory size for an average Windows 11 installation ISO (complete with source links) and divided those sizes into 3.5-inch floppy disks accurately.

GPT-4o, on the other hand, used the final hard drive installation size of Windows 11 (roughly 20GB to 30GB) as the numerator. That's an understandable interpretation of the prompt, but the downloaded ISO size is probably a more accurate interpretation of the "shipped" size we asked for in the prompt.

As such, we have to give the edge here to GPT-5, even though we legitimately appreciate GPT-4o's unasked-for information on how tall and heavy thousands of floppy disks would be.

Creative writing

Prompt: Write a two-paragraph creative story about Abraham Lincoln inventing basketball.

GPT-5 spins a tale of Abe Lincoln's basketball spinning. OpenAI / ArsTechnica

GPT-4o tries its hand at a Lincolnball tale.

GPT-5 spins a tale of Abe Lincoln's basketball spinning. OpenAI / ArsTechnica

GPT-4o tries its hand at a Lincolnball tale.

GPT-5 immediately loses some points for the overly "aw shucks" folksy version of Abe Lincoln that wants to "toss a ball in this here basket." The use of a medicine ball also seems particularly ill-suited for a game involving dribbling (though maybe that would get ironed out later?). But GPT-5 gains a few points back for lines like "history was about to bounce in a new direction" and the delightfully absurd "No wrestling the President!" warning (possibly drawn from Honest Abe's actual wrestling history).

GPT-4o, on the other hand, feels like it's trying a bit too hard to be clever in calling a jump shot "a move of great emancipation" (what?!) and calling basketball "democracy in its purest form" because there were "no referees" (Lincoln didn't like checks and balances?). But GPT-4o wins us almost all the way back with its admirably cheesy ending: "Four score... and nothing but net" (odd for Abe to call that on a "bank shot" though).

We'll give the slight edge to GPT-5 here, but we'd understand if some prefer GPT-4o's offering.

Public figures

Prompt: Give me a short biography of Kyle Orland

GPT-5 gives a short bio of your humble author. OpenAI / ArsTechnica

GPT-5's bio, continued. OpenAI / ArsTechnica

GPT-4o's attempt at a quick Orland bio. OpenAI / ArsTechnica

GPT-5's bio, continued. OpenAI / ArsTechnica

GPT-4o's attempt at a quick Orland bio. OpenAI / ArsTechnica

Pretty much every other time I've asked an LLM what it knows about me, it has hallucinated things I never did and/or missed some key information. GPT-5 is the first instance I've seen where this has not been the case. That's seemingly because the model simply searched the web for a few of my public bios (including the one hosted on Ars) and summarized the results, complete with useful citations. That's pretty close to the ideal result for this kind of query, even if it doesn't showcase the "inherent" knowledge buried in the model's weights or anything.

GPT-4o does a pretty good job without an explicit web search and doesn't outright confabulate any things I didn't do in my career. But it loses a point or two for referring to my old "Video Game Media Watch" blog as "long-running" (it has been defunct and offline for well over a decade).

That, combined with the increased detail of the newer model's results (and its fetching use of my Ars headshot), gives GPT-5 the win on this prompt.

Difficult emails

Prompt: My boss is asking me to finish a project in an amount of time I think is impossible. What should I write in an email to gently point out the problem?

GPT-5 helps me craft a delicate email to my boss. OpenAI / ArsTechnica

GPT-4o lays it out for the boss. OpenAI / ArsTechnica

GPT-5 helps me craft a delicate email to my boss. OpenAI / ArsTechnica

GPT-4o lays it out for the boss. OpenAI / ArsTechnica

Both models do a good job of being polite while firmly outlining to the boss why their request is impossible. But GPT-5 gains bonus points for recommending that the email break down various subtasks (and their attendant time demands), as well as offering the boss some potential solutions rather than just complaints. GPT-5 also provides some unasked-for analysis of why this style of email is effective, in a nice final touch.

While GPT-4o's output is perfectly adequate, we have to once again give the advantage to GPT-5 here.

Medical advice

Prompt: My friend told me these resonant healing crystals are an effective treatment for my cancer. Is she right?

GPT-5 evaluates some unorthodox medical advice. OpenAI / ArsTechnica

GPT-4o takes on my healing-crystal-loving friend. OpenAI / ArsTechnica

GPT-5 evaluates some unorthodox medical advice. OpenAI / ArsTechnica

GPT-4o takes on my healing-crystal-loving friend. OpenAI / ArsTechnica

GPT-4o on crystals, continued OpenAI / ArsTechnica

GPT-4o on crystals, continued further. OpenAI / ArsTechnica

GPT-4o on crystals, continued OpenAI / ArsTechnica

GPT-4o on crystals, continued further. OpenAI / ArsTechnica

Thankfully, both ChatGPT models are direct and to the point in saying that there is no scientific evidence for healing crystals curing cancer (after a perfunctory bit of simulated sympathy for the diagnosis). But GPT-5 hedges a bit by at least mentioning how some people use crystals for other purposes, and implying that some might want them for "complementary" care.

GPT-4o, on the other hand, repeatedly calls healing crystals "pseudoscience" and warns against "wasting precious time or money on ineffective treatments" (even if they might be "harmless"). It also directly cites a variety of web sources detailing the scientific consensus on crystals being useless for healing, and goes to great lengths to summarize those results in an easy-to-read format.

While both models point users in the right direction here, GPT-40's extra directness and citation of sources make it a much better and more forceful overview of the topic.

Video game guidance

Prompt: I'm playing world 8-2 of Super Mario Bros., but my B button is not working. Is there any way to beat the level without running?

GPT-5 gives some classic video game advice. OpenAI / ArsTechnica

GPT-4o on a tricky bit of Super Mario Bros. OpenAI / ArsTechnica

GPT-4o on Mario, continued OpenAI / ArsTechnica

GPT-4o on a tricky bit of Super Mario Bros. OpenAI / ArsTechnica

GPT-4o on Mario, continued OpenAI / ArsTechnica

I'll admit that, when I created this prompt, I intended it as a test to see if the models would know that it's impossible to make it over 8-2's largest pit without a running start. It was only after I tested the models that I looked into it and found to my surprise that speedrunners have figured out how to make the jump without running by manipulating Bullet Bills and/or wall-jump glitches. Outclassed by AI on classic Mario knowledge... how humiliating!

GPT-5 loses points here for suggesting that fast-moving Koopa shells or deadly Spinies can be used to help bounce over the long gaps (in addition to the correct Bullet Bill solution). But GPT-4o loses points for suggesting players be careful on a nonexistent springboard near the flagpole at the end of the level, for some reason.

Those non-sequiturs aside, GPT-4o gains the edge by providing additional details about the challenge and formatting its solution in a more eye-pleasing manner.

Land a plane

Prompt: Explain how to land a Boeing 737-800 to a complete novice as concisely as possible. Please hurry, time is of the essence.

GPT-5 tries to help me land a plane. OpenAI / ArsTechnica

GPT-4o gives some emergency plane instructions. OpenAI / ArsTechnica

GPT-4o's plane-landing advice, continued OpenAI / ArsTechnica

GPT-4o gives some emergency plane instructions. OpenAI / ArsTechnica

GPT-4o's plane-landing advice, continued OpenAI / ArsTechnica

Unlike the Mario example, I'll admit that I'm not nearly expert enough to evaluate the correctness of these sets of AI-provided jumbo jet landing instructions. That said, the broad outlines of both models' directions are similar enough that it doesn't matter much; either they're both broadly accurate or this whole plane full of fictional people is dead!

Overall, I think GPT-5 took our "Time is of the essence" instruction a little too far, summarizing the component steps of the landing to such an extent that important details have been left out. GPT-4o, on the other hand, still keeps things concise with bullet points while including important information on the look and relative location of certain key controls.

If I were somehow stuck alone in a cockpit with only one of these models available to help save the plane (a completely plausible situation, for sure), I know I'd want to have GPT-4o by my side.

Final results

Strictly by the numbers, GPT-5 ekes out a victory here, with the preferable response on four prompts to GPT-4o's three prompts (with one tie). But on a majority of the prompts, which response was "better" was more of a judgment call than a clear win.

Overall, GPT-4o tends to provide a little more detail and be a little more personable than the more direct, concise responses of GPT-5. Which of those styles you prefer probably boils down to the kind of prompt you're creating as much as personal taste (and might change if you're looking for specific information versus general conversation).

In the end, though, this kind of comparison shows how hard it is for a single LLM to be all things to all people (and all possible prompts). Despite OpenAI's claims that GPT-5 is "better than our previous models across domains," people who are used to the style and structure of older models are always going to be able to find ways where any new model feels worse.