The see-through display is a big downgrade from last year's "Orion" prototype demo.

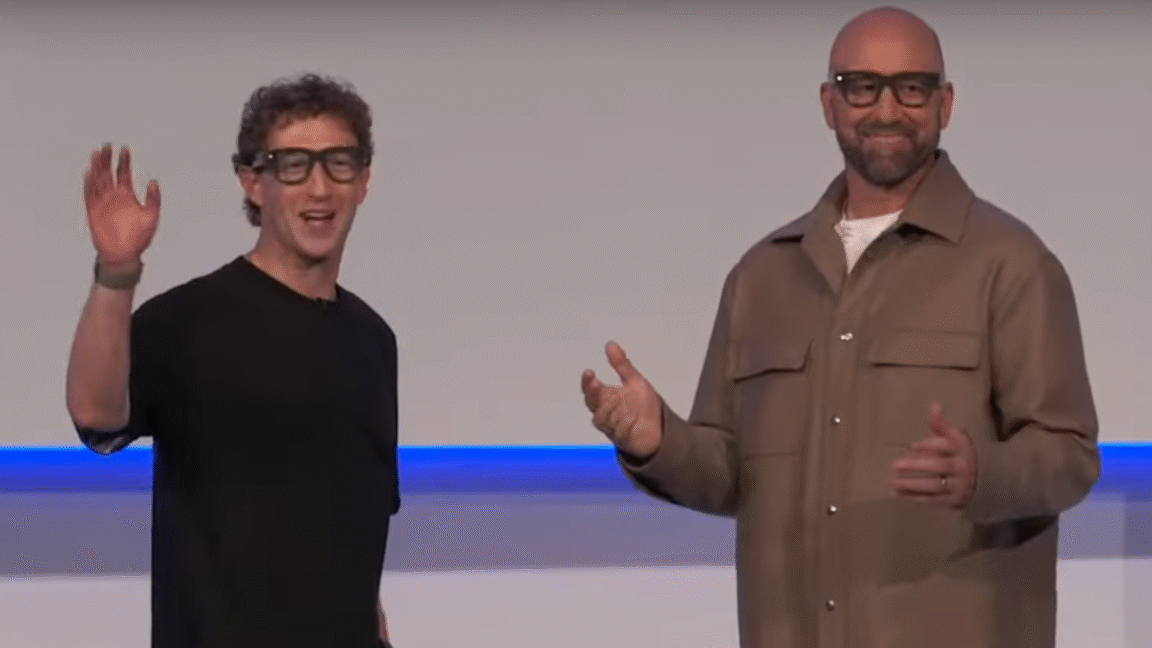

Zuckerberg (left) and Meta CTO Andrew Bosworth show off how cool and natural you can look wearing the Meta Ray-Ban Display. Credit: Meta

At last year's Meta Connect, Mark Zuckerberg focused less on the company's line of Quest VR headsets and more on the "Orion" prototype see-through augmented reality glasses, which he said could launch in some form or another "in the next few years." At the Meta Connect keynote Wednesday evening, though, Zuckerberg announced that the company's Meta Ray-Ban Display AR glasses would be available starting at $799 as soon as Sept. 30.

To be sure, Meta's first commercial smartglasses with a built-in display are a far cry from the Orion prototype Zuckerberg showed off last year. The actual "display" part of the Ray-Ban Display is a paltry 600×600-pixel square that updates at just 30 Hz and takes up a tiny 20-degree portion of only the right eyepiece. Compared to the 70-degree field of view and head-tracked stereoscopic 3D "hologram" effect shown on the Orion lenses, that's a little disappointing.

Still, Zuckerberg could call the display's 42 pixels per degree (PPD) "very high resolution," in a sense (the Meta Quest 3 tops out at around 25 PPD across its much larger display). And hands-on reports suggest the bright 5,000-nit display is viewable even in sunny outdoor conditions, thanks in part to Transitions lenses that automatically darken to block outside light.
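Dividing 600 pixels by 20 degrees gives only 30 PPD, so the quoted 42 PPD figure isn't obvious from the specs above. One plausible way to reconcile the numbers, assuming (on our part, not Meta's) that the 20-degree field of view is measured diagonally across the square display, is sketched below:

```latex
% Assumption: the 20-degree field of view spans the diagonal of the 600x600 square
\begin{aligned}
d_{\text{px}} &= \sqrt{600^2 + 600^2} = 600\sqrt{2} \approx 848.5\ \text{px} \\
\text{PPD} &\approx \frac{848.5\ \text{px}}{20^\circ} \approx 42.4
\end{aligned}
```

If the 20 degrees were instead the horizontal field of view, the same arithmetic would give 600 / 20 = 30 PPD, well short of the quoted figure.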

It's a lot less chunky than a VR headset, that's for sure.

While Meta's new AR glasses look a little chunkier on your face than standard sunglasses, the slim form factor is still in a completely different class from failed experiments like Google Glass or "mixed reality" (MR) headsets like the Quest 3 or Apple Vision Pro (which rely on pass-through video, rather than clear lenses, for a view of the real world). And at just 70 grams, Meta's new glasses are also much more likely to sit comfortably on your face all day than bulky MR headsets that can be seven times as heavy (though the reported six-hour battery life might require charging via an included foldable battery case).

See things in a new way

Zuckerberg's on-stage Ray-Ban Display demo was missing the optical hand-tracking seen in last year's Orion demonstrations. But the new glasses do integrate the "neural interface" wristband that Meta has been teasing for years. By detecting small movements of the wrist muscles, Meta says the neural interface will let users quickly flick through menus and select options without the need to hold a Magic Leap-style controller or call out voice commands in a crowded room.

Zuckerberg also showed how the neural interface can be used to compose messages (on WhatsApp, Messenger, Instagram, or via a connected phone's messaging apps) by following your mimed "handwriting" across a flat surface. Though this feature reportedly won't be available at launch, Zuckerberg said he had gotten up to "about 30 words per minute" in this silent input mode.

The most impressive part of Zuckerberg's on-stage demo that will be available at launch was probably a "live caption" feature that automatically transcribes what your conversation partner is saying in real time. The feature reportedly filters out background noise to focus on captioning just the person you're looking at, too.

A Meta video demos how live captioning works on the Ray-Ban Display (though the field-of-view on the actual glasses is likely much more limited). Credit: Meta

Beyond those "gee whiz" features, the Meta Ray-Ban Display can essentially mirror a small subset of your smartphone's apps on its floating display. Being able to get turn-by-turn directions or see recipe steps on the glasses without glancing down at a phone feels like a genuinely useful new interaction mode. Using the glasses display as a viewfinder to line up a photo or video (shot with the built-in 12-megapixel camera with 3x zoom) also seems like an improvement over previous display-free smartglasses.

But accessing basic apps like weather, reminders, calendar, and emails on your tiny glasses display strikes us as probably less convenient than just glancing at your phone. And hosting video calls via the glasses by necessity forces your partner to see what you're seeing via the outward-facing camera, rather than seeing your actual face.

Meta also showed off some pie-in-the-sky video about how future "Agentic AI" integration would be able to automatically make suggestions and note follow-up tasks based on what you see and hear while wearing the glasses. For now, though, the device represents what Zuckerberg called "the next chapter in the exciting story of the future of computing," which should serve to take focus away from the failed VR-based metaverse that was the company's last "future of computing."