Runway joins a competitive field alongside Google, Nvidia, and others.

Runway claims GWM Worlds can simulate movement in vehicles or boats, not just on foot. Credit: Runway

AI company Runway has announced what it calls its first world model, GWM-1. It’s a significant step in a new direction for a company that has made its name primarily on video generation, and it’s part of a wider gold rush to build a new class of models now that large language models and image and video generation have moved from untapped frontier into a refinement phase.

GWM-1 is a blanket term for a trio of autoregressive models, each built on top of Runway’s Gen-4.5 text-to-video generation model and then post-trained with domain-specific data for different kinds of applications. Here’s what each does.

Runway’s world model announcement livestream video.

GWM Worlds

GWM Worlds offers an interface for digital environment exploration with real-time user input that affects the generation of coming frames, which Runway suggests can remain consistent and coherent “across long sequences of movement.”

Users can define the nature of the world (what it contains and how it appears) as well as rules like physics. They can give it actions or changes that will be reflected in real time, like camera movements or descriptions of changes to the environment or the objects in it. Because the methodology here is basically an advanced form of frame prediction, it might be a stretch to call these full-on world simulations, but the claim is that they’re reliable enough to be usable as such.
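To make "frame prediction driven by user input" concrete, here is a toy sketch of an autoregressive rollout. It is not Runway's architecture or API: the "frame" is just a camera position, `predict_next_frame` is a hand-written stand-in for a learned model, and every name in it is hypothetical. The only point is the loop structure, in which each predicted frame is fed back in as context for the next prediction and the user's action steers it.

```python
from typing import List, Sequence, Tuple

Frame = Tuple[int, int]  # toy "frame": only a camera position (x, y), an assumption
Action = str             # hypothetical action vocabulary, see MOVES below

MOVES = {"left": (-1, 0), "right": (1, 0), "forward": (0, 1), "back": (0, -1)}


def predict_next_frame(history: Sequence[Frame], action: Action) -> Frame:
    """Stand-in for a learned model: next frame from recent frames plus an action."""
    x, y = history[-1]
    dx, dy = MOVES[action]
    return (x + dx, y + dy)


def rollout(first_frame: Frame, actions: Sequence[Action], context: int = 4) -> List[Frame]:
    """Generate frames one at a time, feeding each prediction back in as context."""
    frames = [first_frame]
    for action in actions:
        window = frames[-context:]  # the model only sees a bounded recent history
        frames.append(predict_next_frame(window, action))
    return frames


print(rollout((0, 0), ["forward", "forward", "left"]))
```

In a real system the stand-in function would be a large video model, and small prediction errors would compound over the loop, which is why consistency "across long sequences of movement" is the hard part of the claim.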

Potential applications include pre-visualization and early iteration for game design and development, generation of virtual reality environments, or educational explorations of historical spaces.

There’s also a major use case that takes this outside Runway’s usual area of focus: World models like this can be used to train AI agents of various types, including robots.

GWM Robotics

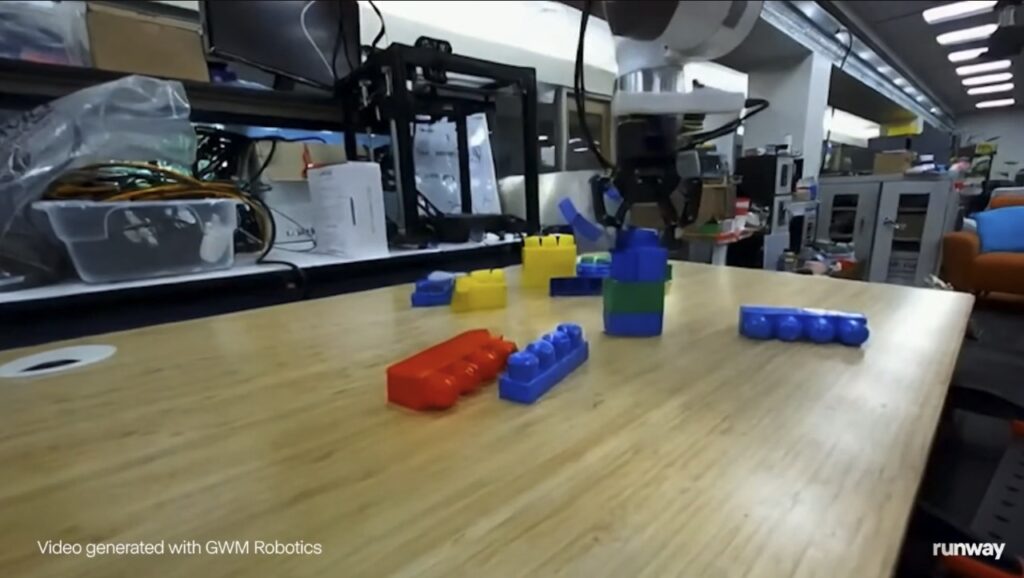

The second model, GWM Robotics, does just that. It can be used “to generate synthetic training data that augments your existing robotics datasets across multiple dimensions, including novel objects, task instructions, and environmental variations.”

A video generated with Runway’s GWM Robotics. Credit: Runway

There are a couple of key applications for this in the field of robotics. First, a world model could be used for training scenarios that are otherwise very hard to reliably reproduce in the physical world, such as varying weather conditions. There’s also policy evaluation—testing control policies entirely in a simulated world before real-world testing, which is safer and cheaper.
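As a rough illustration of policy evaluation in simulation, the sketch below scores one control policy across several simulated conditions before any hardware is involved. Everything here is an assumption made for the example: the one-dimensional simulator is a hand-written stand-in for a world model, "wind" stands in for an environmental variation such as weather, and none of the names come from Runway's SDK.

```python
from typing import Callable, Dict, Sequence

Policy = Callable[[float], float]  # maps observed position to a control command


def simulate_episode(policy: Policy, wind: float, steps: int = 50) -> bool:
    """Toy 1-D simulator: the robot starts at 5.0 and should end near 0.0."""
    position = 5.0
    for _ in range(steps):
        command = max(-1.0, min(1.0, policy(position)))  # actuator limit
        position += command + wind                       # wind is a constant disturbance
    return abs(position) < 0.5                           # success: ended close to target


def evaluate(policy: Policy, winds: Sequence[float]) -> Dict[float, bool]:
    """Run the same policy under each simulated condition and record success."""
    return {wind: simulate_episode(policy, wind) for wind in winds}


def proportional_policy(position: float) -> float:
    """Hypothetical controller: push back in proportion to the distance from target."""
    return -0.8 * position


print(evaluate(proportional_policy, [0.0, 0.3, 0.6]))
```

With these toy numbers the controller reaches the target in calm and light-wind conditions but settles too far away under the strongest wind, the kind of failure that is cheaper to discover in simulation than on a physical robot.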

Runway has put together a Python SDK for its robotics world model API that is currently available on a per-request basis.

GWM Avatars

Lastly, GWM Avatars combines generative video and speech in a unified model to produce human-like avatars that emote and move naturally both while speaking and listening. Runway claims they can maintain “extended conversations without quality degradation”—a mighty feat if true. It’s coming to the web app and the API in the future.

Faces animated semi-realistically during extended conversation, Runway says. Credit: Runway

“General”-ish

Those who have described “general” world models are aiming for something grand: a multi-purpose, foundational model that works out of the box to simulate many types of environments, usable for any tasks, agents, and applications across multiple domains.

World models are definitely not new, but the idea that they can be that general is a relatively recent ambition, and it’s often framed as a stepping stone to artificial general intelligence (AGI)—though there’s no evidence yet that they will in fact lead there for most definitions of the term.

Runway didn’t use AGI framing in this announcement as others like Google’s DeepMind have. That said, CEO Cristóbal Valenzuela did take to X to describe GWM-1 as “a major step toward universal simulation.” That itself is a lofty end point, as we don’t yet have any evidence the current path will lead to something that comprehensive, and you also have to consider that there’s not much consensus on the definition of “universal.”

Even using the word “general” has an air of aspiration to it. You would expect a general world model to be, well, one model—but in this case, we’re looking at three distinct, post-trained models. That caveats the general-ness a bit, but Runway says that it’s “working toward unifying many different domains and action spaces under a single base world model.”

A competitive field

And that brings us to another important consideration: With GWM-1, Runway is entering a crowded gold-rush space where its differentiators and competitive advantages are less clear than they were for video. With video, Runway has been able to make major inroads in film/television, advertising, and other industries because its founders are perceived as being more rooted in those creative industries than most competitors, and they’ve designed tools with those industries in mind.

There are indeed hypothetical applications of world models in film, television, advertising, and game development—but it was apparent from Runway’s livestream that the company is also looking at applications in robotics as well as physics and life sciences research, where competitors are already well-established and where we’ve seen increasing investment in recent months.

Many of those competitors are big tech companies with massive resource advantages over Runway. Runway was one of the first to market with a sellable product, and its aggressive efforts to court industry professionals directly have so far allowed it to overcome those advantages in video generation, but it remains to be seen how things will play out with world models, where it doesn’t enjoy either advantage any more than the other entrants.

Regardless, the GWM-1 advancements are impressive—especially if Runway’s claims about consistency and coherence over longer stretches of time are true.

Runway also used its livestream to announce new Gen-4.5 video generation capabilities, including native audio, audio editing, and multi-shot video editing. Further, it announced a deal with CoreWeave, a cloud computing company with an AI focus. The deal will see Runway using Nvidia’s GB300 NVL72 racks on CoreWeave’s cloud infrastructure for future training and inference.
